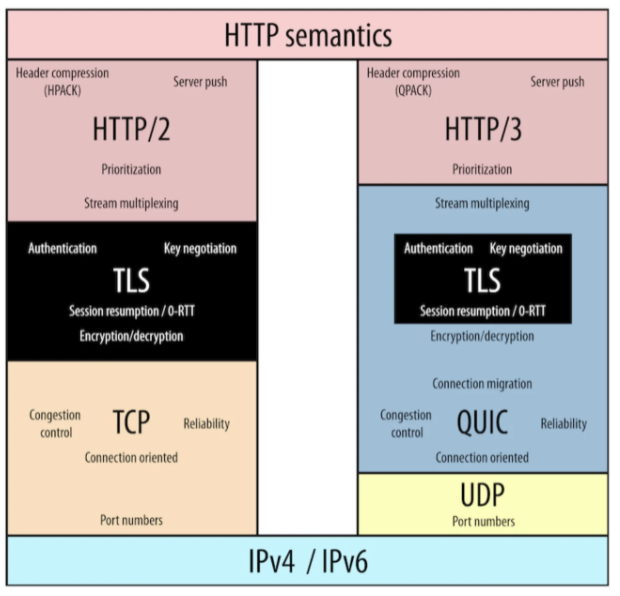

The Internet (IP), Transmission Control (TCP), Hypertext Transfer (HTTP), and Transport Layer Security (TLS and SSL) protocols have long underpinned the World Wide Web. Each provides a layer of abstraction focused on specific concerns. Over time, enhancements have been made to improve performance, security, and privacy, and to extend functionality as the web has evolved. HTTP/3 with QUIC, generally available since 2019, and TLS-1.3, generally available since 2018, are the latest broadly adopted protocols for web traffic, providing enhancements over HTTP/1.1, HTTP/2, and TLS-1.2.

In this article we will dive into the details of HTTP/3 with QUIC and TLS-1.3, including HTTP/2, after first building up to these new technologies layer by layer.

The Networking Stack

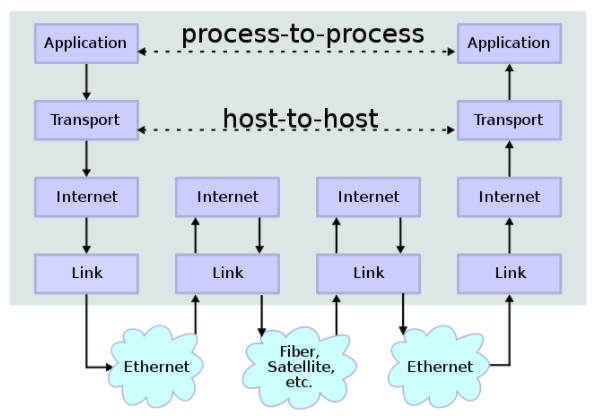

Web requests pass through multiple layers of abstraction, referred to as the networking stack. Though different models represent these abstractions differently, most describe roughly four layers. The lowest is the Link layer, responsible for communication between adjacent network nodes (e.g., Ethernet, WiFi/IEEE-802.11). Next, the Internet layer is responsible for communication between any two network nodes across network boundaries (e.g., IPv4, IPv6). Third, the Transport layer is responsible for message transmission including connection-handling, reliability, flow-control, and multiplexing (e.g., TCP, UDP). Last, the Application layer is responsible for app-level message-handling including syntax, semantics, authentication, and caching (e.g., HTTP, DNS, SSH, MQTT, SMTP, WebSocket, WebRTC).

Link Communication and the Internet Protocol (IP)

The two most fundamental layers of the World Wide Web networking stack are the link layer and the internet layer. Whereas the link layer facilitates communication between two adjacent, locally connected network nodes, the internet layer facilitates communication between any two globally connected network nodes (e.g., via IPv4 or IPv6).

The Internet Protocol (IP) is a connectionless packet-based protocol. The widely adopted IPv4 was standardized in 1981 (RFC791, prepared for DARPA). IPv4 uses a 32-bit address space with roughly 4.3 billion addresses (2^32). As the Internet grew through the 1980s and early 1990s it became apparent the 32-bit address space would restrict the public internet. CIDR was introduced in 1993, and NAT shortly after in 1994, to slow the rapid depletion of IPv4 addresses. Prior to CIDR, networks could only be allocated with prefixes of 8, 16, or 24 bits (classes A, B, and C); with CIDR, addresses can be allocated along any address-bit boundary. This allowed networks and subnets to be sized more closely to need. In conjunction, network address translation (NAT) enabled mapping one IP address space into another. This enabled large private networks with minimal allocation of public IP addresses. For example, with NAT an entire private network can share a single public IP address. Though CIDR and NAT bought valuable time before IPv4 address exhaustion, a larger address space was still needed eventually as the Internet continued to grow rapidly.
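To make the address arithmetic concrete, here is a minimal sketch using Python's standard `ipaddress` module. The `10.0.0.0/22` block, the `203.0.113.7` public address, the starting port, and the `ToyNat` class are all illustrative assumptions; real NAT devices also track protocol, timeouts, and inbound mappings.

```python
import ipaddress

# Total IPv4 address space: 2^32 addresses (about 4.3 billion).
ADDRESS_SPACE = 2 ** 32

# CIDR: a prefix can end on any bit boundary, not only /8, /16, or /24.
block = ipaddress.ip_network("10.0.0.0/22")
subnets = list(block.subnets(new_prefix=24))  # split the /22 into four /24s


class ToyNat:
    """Hypothetical port-based NAT: many private hosts share one public IP."""

    def __init__(self, public_ip: str, first_port: int = 40000):
        self.public_ip = public_ip
        self.next_port = first_port
        self.table = {}  # (private_ip, private_port) -> public_port

    def translate_outbound(self, private_ip: str, private_port: int):
        key = (private_ip, private_port)
        if key not in self.table:
            # First packet of a new flow: allocate a fresh public port.
            self.table[key] = self.next_port
            self.next_port += 1
        return (self.public_ip, self.table[key])


print(ADDRESS_SPACE)         # 4294967296
print(block.num_addresses)   # 1024
print(len(subnets))          # 4
```

Two private hosts sending from the same source port are distinguished only by the public port the sketch assigns them, which is how a whole private network can sit behind one public address.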

IPv6 was first specified in 1995 (RFC1883), became a Draft Standard in 1998 (RFC2460), and was ratified as an Internet Standard in 2017 (RFC8200). IPv6 alleviates the depletion of addresses with a 128-bit address space supporting 2^128 addresses. Since IPv6 is not interoperable with IPv4 its adoption has been slow, but support is steadily increasing.
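For a sense of scale, the short sketch below compares the two address spaces with Python's standard `ipaddress` module. The address `2001:db8::1` comes from the range reserved for documentation and is used purely as an example.

```python
import ipaddress

ipv4_total = 2 ** 32
ipv6_total = 2 ** 128

# IPv6 has 2^96 times as many addresses as IPv4.
ratio = ipv6_total // ipv4_total

addr = ipaddress.ip_address("2001:db8::1")
print(addr.version)    # 6
print(addr.exploded)   # 2001:0db8:0000:0000:0000:0000:0000:0001

# A single /64 subnet already holds 2^64 addresses,
# far more than the entire IPv4 address space.
subnet = ipaddress.ip_network("2001:db8::/64")
print(subnet.num_addresses == 2 ** 64)  # True
```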

TCP and UDP

The Transport layer is responsible for end-to-end message transmission including connection-handling, reliability, flow-control, and multiplexing. This is also referred to as Layer 4 with reference to the OSI networking stack model. Load balancers often operate at the Transport layer (L4 load balancing), but can also operate at the Application layer (L7 load balancing). The two best known protocols at this layer are TCP and UDP. Later we will see how QUIC operates at the Transport layer as well.

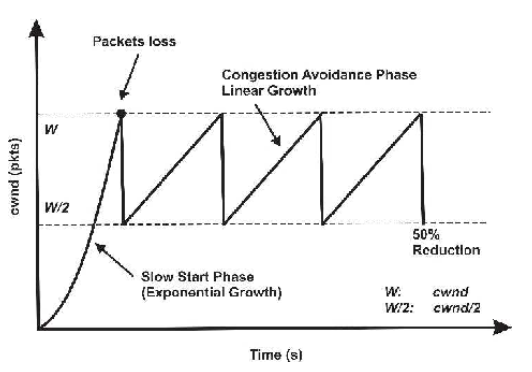

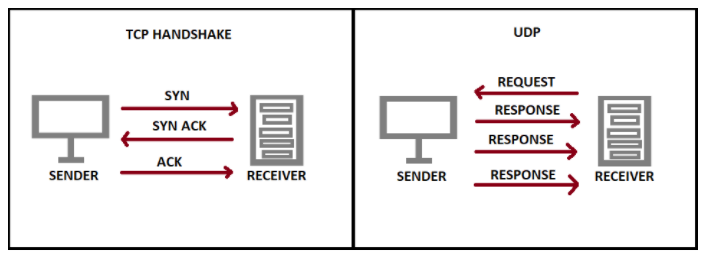

The Transmission Control Protocol (TCP) specification was first published in 1974 (RFC675) and was adopted alongside IPv4 during the early days of the Internet. TCP provides a stateful connection, prioritizing reliability over performance. A three-way handshake establishes the TCP connection, and data is split into segments. Because IP traffic is subject to network congestion and to packet loss, duplication, and reordering, TCP has mechanisms to handle these issues reliably: retransmission of lost packets, reordering of out-of-order segments, buffering of packets to control the transmission rate, and checksums for error detection. Once a TCP connection is established, it starts at a low transmission rate and ramps up until it begins to detect loss or congestion. TCP is commonly used for file sharing, websites, and internet applications.
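The receiver-side mechanisms described above (checksums, duplicate suppression, and reordering) can be sketched as a toy model. This is not how a real TCP stack is implemented: the `Segment` type and both helper functions are hypothetical, and CRC32 stands in for TCP's actual 16-bit checksum.

```python
import zlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    seq: int        # byte offset of the payload within the stream
    payload: bytes
    checksum: int   # CRC32 stand-in; real TCP uses a 16-bit ones' complement sum


def make_segments(data: bytes, size: int) -> list[Segment]:
    """Split a byte stream into fixed-size segments with checksums."""
    return [
        Segment(i, data[i:i + size], zlib.crc32(data[i:i + size]))
        for i in range(0, len(data), size)
    ]


def reassemble(received: list[Segment]) -> tuple[bytes, int]:
    """Return the contiguous in-order bytes and the next expected offset."""
    valid = {}
    for seg in received:
        if not seg.payload or zlib.crc32(seg.payload) != seg.checksum:
            continue  # empty or corrupted: drop it and rely on retransmission
        valid.setdefault(seg.seq, seg.payload)  # duplicates are ignored
    out = bytearray()
    next_seq = 0
    while next_seq in valid:  # stop at the first gap in the sequence
        out += valid[next_seq]
        next_seq += len(valid[next_seq])
    return bytes(out), next_seq


data = b"hello, reliable world"
segs = make_segments(data, 5)

# Out of order, one duplicate, segments 1 and 3 missing:
print(reassemble([segs[2], segs[0], segs[0], segs[4]]))  # (b'hello', 5)
```

The returned offset plays the role of an acknowledgment number: it tells the sender which byte the receiver still needs, and everything after the gap waits in the buffer until the missing segment is retransmitted.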

The User Datagram Protocol (UDP) specification was first published in 1980 (RFC768). Unlike TCP, it offers connectionless transmission, prioritizing performance over reliability: UDP has no handshake and no mechanisms for ordering, congestion control, or retransmission. UDP is used for DNS and commonly for multimedia streaming, VoIP, and multiplayer video games.
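The connectionless nature of UDP is easy to see with Python's standard `socket` module. The sketch below sends one datagram over the loopback interface; the `udp_roundtrip` helper is a hypothetical name, and on a real network the datagram could be lost with no notification to the sender.

```python
import socket


def udp_roundtrip(message: bytes, timeout: float = 2.0) -> bytes:
    """Send one datagram to a local socket and return what was received."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        receiver.settimeout(timeout)     # never block forever if the datagram is lost
        # No connect() and no handshake: the datagram is simply sent.
        sender.sendto(message, receiver.getsockname())
        data, _addr = receiver.recvfrom(2048)
        return data


print(udp_roundtrip(b"ping"))  # b'ping'
```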

HTTP, TLS/SSL, and HTTPS

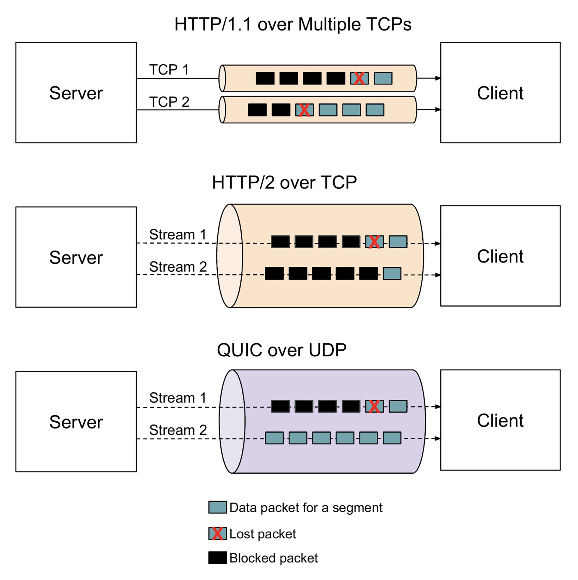

HTTP was initially developed in the late 1980s and early 1990s to provide a request/response hypertext (e.g., HTML webpage) specification on top of TCP while supporting intermediary networking nodes. Tim Berners-Lee proposed the project at CERN in 1989 and completed the first working prototype by the end of 1990. HTTP/1.0 and HTTP/1.1 were then specified in 1996 (RFC1945) and 1997 (RFC2068) respectively. HTTP/1.0 initiated a separate connection per resource, whereas HTTP/1.1 allows for the reuse of connections across multiple resources. Though browsers can issue requests in parallel by opening several connections, they limit the number of open connections per host (e.g., 6 in Chrome and Firefox). Head-of-line blocking becomes an issue in HTTP/1.1 when more resources are requested in parallel than there are connections available: each connection handles one request at a time, so the remaining requests queue behind responses still in flight. Pipelining was introduced to try to tackle this but had trouble gaining wide adoption and was superseded by HTTP/2 multiplexing.
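HTTP/1.1 is a plain-text protocol, so a request can be sketched with a few lines of string handling. The helper names below are hypothetical and `example.com` is a placeholder host; the sketch only builds and parses messages and does not open a network connection.

```python
def build_request(host: str, path: str, keep_alive: bool = True) -> bytes:
    """Build a minimal HTTP/1.1 GET request."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",  # the Host header is mandatory in HTTP/1.1
        f"Connection: {'keep-alive' if keep_alive else 'close'}",
        "",
        "",               # a blank line terminates the header block
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_status_line(response: bytes) -> tuple[str, int, str]:
    """Extract (version, status code, reason) from a raw response."""
    status_line = response.split(b"\r\n", 1)[0].decode("ascii")
    version, code, reason = status_line.split(" ", 2)
    return version, int(code), reason


# With connection reuse, both requests travel over the same TCP connection,
# but the second response cannot start until the first has finished.
on_the_wire = build_request("example.com", "/index.html") + \
              build_request("example.com", "/style.css")

print(parse_status_line(b"HTTP/1.1 200 OK\r\nContent-Length: 0\r\n\r\n"))
# ('HTTP/1.1', 200, 'OK')
```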

Each resource in HTTP is referenced by a URI. HTML is used for rendering and may include URIs to navigate between pages. Multiple types of user agents may initiate a request, including a web browser, web crawler, mobile app, or any other software system.

Transport Layer Security (TLS) and the now deprecated Secure Sockets Layer (SSL) are cryptographic protocols for confidential, tamper-evident, and authentic communications between software applications. The SSL standard was iterated on during the nineties before evolving into TLS-1.0 in 1999 and later TLS-1.2 in 2008. TLS encrypts the entire HTTP message, including the URI path, headers, and body, securing it from attackers intercepting traffic. Because TLS runs atop TCP and IP, the destination IP address and port are not hidden, and the server's hostname is typically visible as well through the handshake's Server Name Indication (SNI) field. Certificates are used with asymmetric keys in conjunction with well-known Certificate Authorities to establish authenticity. Symmetric keys are used after the initial handshake for high performance. HTTPS is an extension of HTTP using SSL/TLS to encrypt web traffic. More than 75% of web requests use HTTPS today. See An Introduction to Secure Web Communications - HTTPS for a deep-dive into TLS and HTTPS.
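As a rough illustration of how a client enforces authenticity, the sketch below configures a TLS client context with Python's standard `ssl` module. The `make_client_context` helper is a hypothetical name, and choosing TLS-1.2 as the floor is an assumption made for the example; no connection is opened.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Client-side TLS settings: verify certificates, refuse old protocols."""
    ctx = ssl.create_default_context()            # loads the system's trusted CAs
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # reject SSL and early TLS versions
    return ctx


ctx = make_client_context()

# The default context requires a certificate that chains to a trusted
# Certificate Authority and matches the requested hostname.
print(ctx.verify_mode == ssl.CERT_REQUIRED)  # True
print(ctx.check_hostname)                    # True

# To use it, wrap a connected TCP socket (not executed here):
#   tls_sock = ctx.wrap_socket(tcp_sock, server_hostname="example.com")
```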

HTTP/2

HTTP/2, standardized in 2015 (RFC7540), was the first major revision of HTTP since HTTP/1.1 in 1997. HTTP/2 continues to rely on TCP and is derived from an earlier experimental protocol developed by Google called SPDY. HTTP/2 improves performance through several mechanisms: HTTP headers are compressed to reduce transmission size, multiple concurrent requests are multiplexed over a single TCP connection to avoid head-of-line blocking at the HTTP layer, and requests can be prioritized. A big improvement here is the ability to break multiple concurrent requests apart into frames, interleave them, and then receive multiple responses over that same connection. HTTP/2 also introduces the capability for servers to push content to clients optimistically over an established TCP connection, called Server Push. In practice, pushed resources are only used in a web browser when the browser attempts to make a matching request.
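The framing and interleaving idea can be sketched in a few lines. This toy model ignores the real HTTP/2 binary frame format, flow control, and prioritization; the function names and the simple round-robin schedule are assumptions for illustration. The odd stream identifiers mirror HTTP/2's convention for client-initiated streams.

```python
from itertools import zip_longest


def to_frames(stream_id: int, body: bytes, frame_size: int) -> list[tuple[int, bytes]]:
    """Split one message into frames tagged with its stream identifier."""
    return [(stream_id, body[i:i + frame_size])
            for i in range(0, len(body), frame_size)]


def interleave(*streams: list[tuple[int, bytes]]) -> list[tuple[int, bytes]]:
    """Round-robin frames from every stream onto a single connection."""
    wire = []
    for group in zip_longest(*streams):
        wire.extend(frame for frame in group if frame is not None)
    return wire


def demultiplex(wire: list[tuple[int, bytes]]) -> dict[int, bytes]:
    """Reassemble each message from the interleaved frames."""
    bodies: dict[int, bytes] = {}
    for stream_id, chunk in wire:
        bodies[stream_id] = bodies.get(stream_id, b"") + chunk
    return bodies


wire = interleave(to_frames(1, b"aaaabbbb", 4), to_frames(3, b"cccc", 4))
print(wire)               # [(1, b'aaaa'), (3, b'cccc'), (1, b'bbbb')]
print(demultiplex(wire))  # {1: b'aaaabbbb', 3: b'cccc'}
```

Because every frame carries its stream identifier, the short response on stream 3 completes without waiting for the longer one on stream 1.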

HTTP/2 makes major improvements over HTTP/1.1, most importantly by addressing the HTTP head-of-line blocking issue, but still contends with head-of-line blocking at the TCP layer. When a TCP packet is lost or reordered, all streams stall until the missing data arrives, because TCP delivers bytes to the application strictly in order.

HTTP/3 with QUIC and TLS-1.3

QUIC, the transport protocol underlying HTTP/3, was first implemented at Google in 2012. Jim Roskind designed the initial protocol and implementation at Google, the same software engineer who in 2008 helped design the TLS-1.2 protocol (RFC5246). QUIC aims to be nearly equivalent to TCP but with much-improved latency and elimination of TCP head-of-line blocking. QUIC also improves switching between networks (for example from WiFi to cellular): connections are identified by connection identifiers rather than by IP address and port, so a client can continue the same connection after its address changes.

Update (2021): QUIC was standardized in 2021 (RFC9000), with the HTTP/3 mapping specified separately (later published as RFC9114). Together QUIC and HTTP/3 have steadily picked up adoption, with all modern web browsers now supporting them (Chrome, Firefox, Safari, Microsoft Edge), and a majority of Chrome traffic to Google servers is currently using QUIC. As of 2021 more than 20% of websites use HTTP/3.

Whereas HTTP/1.x and HTTP/2 were built upon TCP, HTTP/3 with QUIC is built upon UDP. Since UDP itself offers no reliability, QUIC implements retransmission, ordering, and congestion control on top of it. HTTP/3 maintains consistent HTTP semantics but leverages QUIC to fully address the head-of-line blocking still present in HTTP/2 due to its reliance on TCP. Though HTTP/2 improves the head-of-line blocking issue, it must still contend with it at the TCP layer; any time a packet is lost or reordered, TCP causes all active streams to halt. With QUIC, only the stream whose data was lost is halted, while the others continue. In poor network conditions, this performance improvement in HTTP/3 is even more apparent.
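The difference in blocking behavior can be shown with a toy simulation. It makes two simplifying assumptions: each packet carries data for exactly one stream, and nothing has been retransmitted yet. The function names and the packet tuples are hypothetical.

```python
# Each packet is (packet_number, stream_id, data).
Packet = tuple[int, str, str]


def deliverable_tcp(packets: list[Packet], lost: set[int]) -> list[tuple[str, str]]:
    """One ordered byte stream: nothing after the first gap is delivered."""
    delivered = []
    for number, stream_id, data in packets:
        if number in lost:
            break  # everything behind the gap waits for the retransmission
        delivered.append((stream_id, data))
    return delivered


def deliverable_quic(packets: list[Packet], lost: set[int]) -> list[tuple[str, str]]:
    """Ordering is per stream: a gap only stalls the stream it belongs to."""
    stalled = set()
    delivered = []
    for number, stream_id, data in packets:
        if number in lost:
            stalled.add(stream_id)
        elif stream_id not in stalled:
            delivered.append((stream_id, data))
    return delivered


packets = [(1, "A", "a1"), (2, "B", "b1"), (3, "A", "a2"), (4, "C", "c1"), (5, "B", "b2")]

print(deliverable_tcp(packets, lost={2}))   # [('A', 'a1')]
print(deliverable_quic(packets, lost={2}))  # [('A', 'a1'), ('A', 'a2'), ('C', 'c1')]
```

With packet 2 lost, the TCP model hands only the first packet to the application, while the QUIC model keeps streams A and C moving and holds back only stream B.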

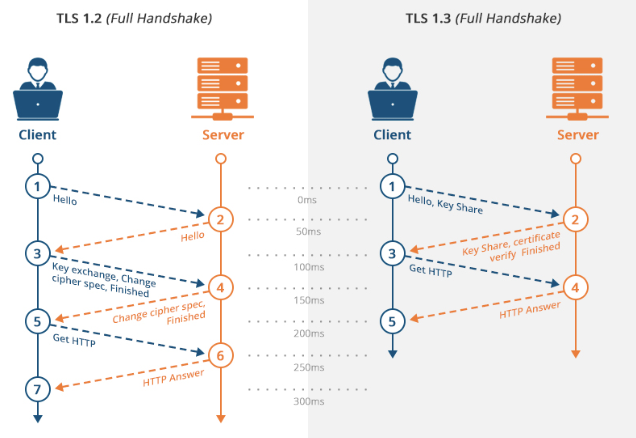

TLS requires a handshake in which the two parties agree on the symmetric keys used for the rest of the session. QUIC sends the TLS key-exchange as part of the initial connection request, so the transport and cryptographic handshakes complete together. TLS-1.3 improves performance with a simpler TLS handshake, requiring at most 3 packets, down from the 5 to 7 packets required in TLS-1.2. TLS-1.3 eliminates support for static RSA key exchange, relying instead on ephemeral Diffie-Hellman keys, which are derived from fresh values contributed by both parties and are less likely to be compromised: a later leak of the server's long-term key does not expose past sessions. By relying exclusively on Diffie-Hellman, a TLS-1.3 client can send the inputs needed for key generation in its initial “hello” message as part of the handshake. This eliminates an entire round-trip from the TLS handshake. Further, when revisiting a site, clients can send data on the initial message using a pre-shared key (PSK), eliminating all initial round-trips from the handshake (0-RTT). This can result in a ~25% performance improvement.
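The Diffie-Hellman exchange at the heart of this handshake can be sketched with toy numbers. The prime, generator, and private values below are assumptions chosen to be checkable by hand and are far too small to be secure; TLS-1.3 itself uses elliptic-curve groups such as X25519 or large finite-field groups, and feeds the result into a key-derivation function.

```python
# Public parameters agreed by both sides (toy values, NOT secure).
P = 23  # prime modulus
G = 5   # generator


def public_value(private: int) -> int:
    """Value sent in the clear as a key share: G^private mod P."""
    return pow(G, private, P)


def shared_secret(peer_public: int, private: int) -> int:
    """Both sides arrive at the same value: peer_public^private mod P."""
    return pow(peer_public, private, P)


# Fresh (ephemeral) private values are chosen for every handshake.
client_private, server_private = 4, 3

client_share = public_value(client_private)  # sent in the client's hello
server_share = public_value(server_private)  # sent in the server's hello

print(client_share, server_share)                   # 4 10
print(shared_secret(server_share, client_private))  # 18
print(shared_secret(client_share, server_private))  # 18
```

Only the two shares cross the network; the private values never do. Because the client's share travels in its very first message, the server can compute the shared secret immediately, which is what saves a round trip compared with TLS-1.2.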

Image references:

- Sevket Arisu, Ertan Yildiz, Ali C. Begen. 2019. Game of protocols: Is QUIC ready for prime time streaming?