Cloud computing brought web applications and computing resources out of office server rooms and into online providers’ managed data centers. Containerization took this further, simplifying highly distributed systems by allowing multiple applications to run independently on a single virtual machine. The next revolution in cloud computing is here and, to many, it is the full realization of infrastructure in the cloud, with the potential for massive cost savings, better performance, and even less infrastructure to manage. Event-driven serverless computing is a game changer.

Here we will discuss the pros and cons of serverless computing, or Functions-as-a-Service (FaaS). Part 2 will discuss how to build your next application on a serverless architecture, along with an example GitHub repository showing a working AWS Lambda / API Gateway / Terraform / Serverless Framework project.

What Brought Us Here

Cloud computing, with its on-demand resources, was a revolutionary shift from the bare-metal and reserved instance models of days past. Amazon, a leader in this shift, started offering its core EC2 and S3 services nearly a decade ago, and we have witnessed firsthand how it revolutionized the web sector. Relieved of managing their own hardware or maintaining their own remote virtual machines, organizations could focus on their core competencies: their products and customers.

Containerization with Docker then made waves several years ago when Docker introduced its open-source virtualization software. Amazon’s Container Service (ECS), built around Docker containers, brought new capabilities: multiple applications could run in isolated containers on a single virtual machine. Like a traditional VM, a Docker container provides its own memory space, processes, network interfaces, users, and file system, without the resource overhead of a traditional VM.

Serverless is the culmination of these advances: rather than hosting an application 24/7 regardless of current load, organizations can upload individual functions that are instantiated on demand, often within milliseconds of a request. These instances are ephemeral, living only as long as they are needed to serve requests. This concept introduces the potential for huge gains in cost and performance, while adding a further layer of abstraction over infrastructure.

Why (or why not) Serverless?

Web servers are often idle more than they are actively executing. In fact, Amazon cites an average server utilization of under 20 percent! This is a huge waste of both resources and money. AWS Lambda, Amazon’s serverless offering, remedies this by charging only for the fractions of a second your code is actively executing. Where a comparable EC2 instance or ECS cluster is billed for hours, days, or months of uptime, a Lambda function is billed for its execution time alone, in 100-millisecond increments (AWS has since moved to 1-millisecond increments). One pays only for the time a function spends responding to a request or event, typically a few hundred milliseconds, which can result in massive cost savings.
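
The billing model above is simple arithmetic, so a quick estimate makes the comparison concrete. The sketch below is illustrative only: the per-GB-second and per-request prices, the 730-hour month, the $0.05/hour server rate, and the sample workload are all assumptions rather than quotes from AWS, so check current pricing before relying on any figure. The `increment_ms` parameter defaults to the 100 ms rounding described above and can be set to 1 to model per-millisecond billing.

```python
import math

# Illustrative prices only (assumptions); check current AWS pricing before relying on them.
PRICE_PER_GB_SECOND = 0.00001667   # USD per GB-second of compute
PRICE_PER_MILLION_REQUESTS = 0.20  # USD per one million requests


def billed_duration_ms(duration_ms: float, increment_ms: int = 100) -> int:
    """Round an execution time up to the next billing increment."""
    return math.ceil(duration_ms / increment_ms) * increment_ms


def monthly_lambda_cost(requests: int, duration_ms: float, memory_mb: int,
                        increment_ms: int = 100) -> float:
    """Estimate a month of Lambda charges: compute (GB-seconds) plus request fees."""
    billed_s = billed_duration_ms(duration_ms, increment_ms) / 1000
    gb_seconds = requests * (memory_mb / 1024) * billed_s
    compute = gb_seconds * PRICE_PER_GB_SECOND
    request_fee = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return compute + request_fee


def monthly_server_cost(hourly_rate: float, hours: float = 730) -> float:
    """An always-on server is billed for uptime, whether or not it is busy."""
    return hourly_rate * hours


if __name__ == "__main__":
    # Hypothetical workload: 1M requests/month, 250 ms each, 512 MB of memory.
    lam = monthly_lambda_cost(1_000_000, 250, 512)
    srv = monthly_server_cost(0.05)  # hypothetical $0.05/hour instance
    print(f"Lambda: ${lam:.2f}/month, server: ${srv:.2f}/month")
```

Under these assumed numbers the sketch prints roughly $2.70 per month for Lambda against $36.50 for the always-on server. The gap narrows as a service's traffic approaches constant load.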

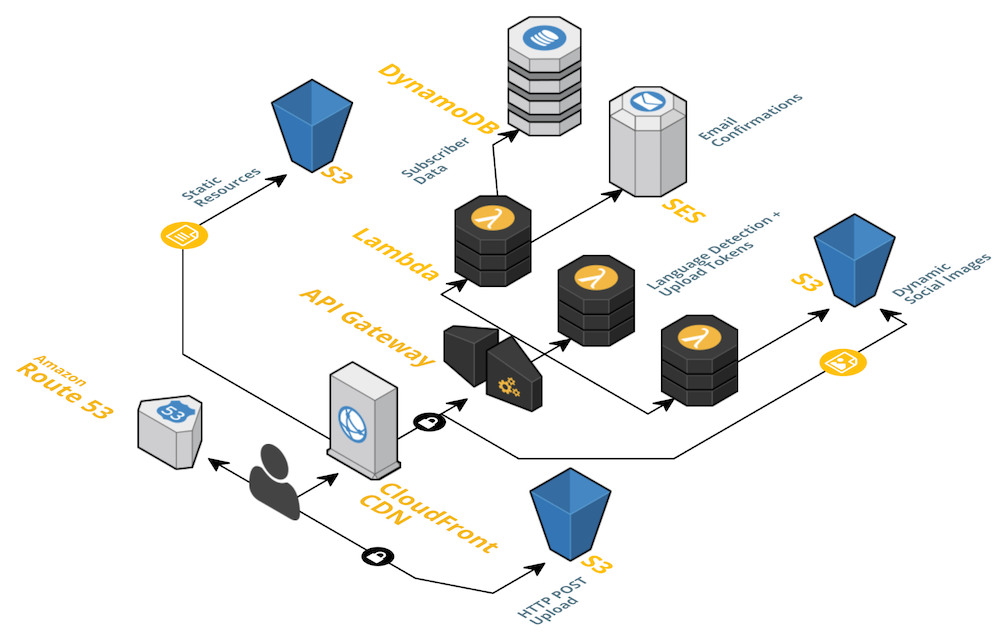

Beyond cost savings, a serverless architecture built on AWS Lambda adds a further layer of abstraction that reduces infrastructure management. Lambda functions are instantiated as needed to scale with incoming requests and events. With API Gateway, incoming HTTP requests can be routed to functions, or a Lambda can be triggered by events from S3, DynamoDB, and other services, with thousands of Lambda instances running in parallel. As load increases, the number of Lambda instances grows automatically and transparently to the developer, managed entirely by AWS. This removes the need to define load balancers and Auto Scaling groups to meet high-availability and scalability requirements. Hosting a service becomes as simple as uploading functions to AWS Lambda and defining routes in API Gateway.
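
To make the routing concrete, here is a minimal Python handler, a sketch under assumptions rather than the project's code. It assumes API Gateway's REST API Lambda proxy integration, which passes `httpMethod` and `path` in the event and expects a `statusCode`/`headers`/`body` response, and it also accepts S3 event notifications, which arrive as a `Records` list. The route table and the `list_items` and `health` functions are hypothetical. Many teams instead map each API Gateway route to its own function; a single router function is just one option.

```python
import json


# Hypothetical route handlers; in a real project each could be its own function.
def list_items(event):
    return {"items": []}


def health(event):
    return {"status": "ok"}


ROUTES = {
    ("GET", "/items"): list_items,
    ("GET", "/health"): health,
}


def http_response(status, payload):
    """Shape a response the way API Gateway's Lambda proxy integration expects."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def handler(event, context):
    """Entry point: AWS Lambda calls this with the triggering event and a context object."""
    # HTTP request forwarded by API Gateway (REST API proxy integration).
    if "httpMethod" in event:
        route = ROUTES.get((event["httpMethod"], event.get("path")))
        if route is None:
            return http_response(404, {"error": "not found"})
        return http_response(200, route(event))

    # S3 notification: one or more records describing changed objects.
    records = event.get("Records", [])
    if records and records[0].get("eventSource") == "aws:s3":
        keys = [r["s3"]["object"]["key"] for r in records]
        print(json.dumps({"processed": keys}))  # stdout ends up in CloudWatch Logs
        return {"processed": len(keys)}

    raise ValueError("unsupported event shape")
```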

For all its upsides, a serverless architecture on AWS comes with a few caveats that must be considered. First and foremost, one will likely need to raise the account’s Lambda concurrency limit. By default, Amazon limits concurrent Lambda executions to 1,000 per account per region, a pool shared by every function in that account and region, which can quickly become a problem for web-scale services or for large organizations operating under a single AWS account. Requesting a limit increase for the AWS account can solve this.
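
Whether the default limit is enough can be estimated up front. AWS's documentation suggests approximating a function's concurrency as its request rate multiplied by its average duration; the sketch below applies that rule, with durations in milliseconds, to a few hypothetical services sharing one account and region. The service figures are invented for illustration.

```python
import math

DEFAULT_ACCOUNT_LIMIT = 1000  # default concurrent executions per account, per region


def required_concurrency(requests_per_second: int, avg_duration_ms: int) -> int:
    """Concurrent executions needed at steady state: arrival rate x duration."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


def needs_limit_increase(services, limit: int = DEFAULT_ACCOUNT_LIMIT) -> bool:
    """All functions in one account and region draw from the same concurrency pool."""
    total = sum(required_concurrency(rps, ms) for rps, ms in services)
    return total > limit


if __name__ == "__main__":
    # Hypothetical (requests per second, average duration in ms) per service.
    services = [(2000, 200), (1500, 300), (800, 250)]
    print([required_concurrency(r, d) for r, d in services])
    print("limit increase needed:", needs_limit_increase(services))
```

Here three modest services (400, 450, and 200 concurrent executions) together exceed the 1,000 default even though none comes close on its own, which is exactly the single-account problem described above.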

As load increases and more executions are required, AWS starts additional Lambda instances, but only at a rate of 500 new instances per minute. This can be problematic for exceptionally spiky traffic patterns. Some teams have solved this by “pre-warming” their Lambdas: artificially generating requests, and therefore Lambda executions, ahead of an expected spike so that enough instances are already running when it arrives.
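
A rough model shows why the ramp rate matters. The sketch below assumes capacity grows by at most 500 instances per minute, as described above, starting from however many instances are already warm (`warm_instances`, an assumed input). It deliberately ignores any initial burst allowance AWS may apply, so treat it as a simplification.

```python
import math

SCALE_UP_PER_MINUTE = 500  # additional instances per minute, per the text above


def minutes_to_reach(target: int, warm_instances: int) -> int:
    """Whole minutes of ramp-up before `target` concurrent instances are available."""
    shortfall = max(0, target - warm_instances)
    return math.ceil(shortfall / SCALE_UP_PER_MINUTE)


def capacity_timeline(target: int, warm_instances: int) -> list:
    """Available concurrency at the start of each minute until the target is met."""
    timeline = [min(target, warm_instances)]
    while timeline[-1] < target:
        timeline.append(min(target, timeline[-1] + SCALE_UP_PER_MINUTE))
    return timeline
```

For example, `capacity_timeline(2000, 500)` returns `[500, 1000, 1500, 2000]`: three minutes during which requests beyond the available concurrency are throttled. Pre-warming effectively raises `warm_instances` before the spike arrives.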

Warm starts versus cold starts are another consideration when building a serverless architecture on AWS. Lambda instances are ephemeral, but an instance is not terminated the moment execution completes; it lingers for a cooldown period, observed to be roughly 15 minutes (AWS does not guarantee any particular duration). If a request arrives during this cooldown period, the instance is reused and the cooldown resets. This is referred to as a “warm start”, as opposed to a “cold start”, in which a new Lambda instance must be initialized. Since warm starts respond to requests significantly faster than cold starts, many teams keep their Lambda instances alive and “warm” by sending a lightweight request at an interval shorter than the cooldown. Each ping incurs a minimal fraction of a cent, but keeps the instance alive with low response latency.
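
The sketch below illustrates both ideas in Python. Code at module level runs once per instance, during a cold start, and is reused by every warm start on that instance, so a simple counter reveals which kind of start a request got. The early return for a keep-warm ping assumes a hypothetical `"warmup"` key that your scheduled trigger (for example, a CloudWatch Events scheduled rule) would send; it is a convention you would choose, not an AWS field.

```python
import time

# Module-level code runs once per instance, during a cold start.
# Expensive setup (SDK clients, config, connection pools) belongs here so
# that warm starts on the same instance can reuse it.
_INSTANCE_STARTED_AT = time.time()
_invocations = 0


def handler(event, context):
    global _invocations
    _invocations += 1
    cold_start = _invocations == 1  # first call handled by this instance

    # Hypothetical keep-warm marker sent by a scheduled rule; return immediately
    # so the ping is billed for as little time as possible.
    if event.get("warmup"):
        return {"warmed": True, "cold_start": cold_start}

    return {
        "cold_start": cold_start,
        "instance_age_s": round(time.time() - _INSTANCE_STARTED_AT, 3),
    }
```

Note that a single ping keeps only one instance warm; keeping several warm requires several concurrent pings.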

Maximum execution time is another limitation of Lambda. Although a warm instance may be reused across many requests, a single invocation cannot run longer than five minutes (AWS has since raised this limit to 15 minutes). This is a generous maximum and shouldn’t be a problem for most workloads, but a long-running job, such as database maintenance, may not fit in a single Lambda invocation and will need to be split across several.
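
One common way to handle a job that outgrows a single invocation is to work in chunks against a deadline and hand back a cursor. The Lambda context object in Python provides `get_remaining_time_in_millis()`, which this sketch polls before each item. The 10-second safety margin, the event shape (`items`, `cursor`), and the placeholder work function are assumptions for illustration, and how the next invocation is triggered (a queue, a scheduled rule, Step Functions) is left out.

```python
from typing import Callable, List, Optional

SAFETY_MARGIN_MS = 10_000  # stop well before the timeout (assumed value)


def process_until_deadline(items: List[str], start: int,
                           remaining_ms: Callable[[], int],
                           work: Callable[[str], None]) -> Optional[int]:
    """Process items from `start` while enough time remains.

    Returns the index to resume from, or None when every item is done.
    """
    i = start
    while i < len(items):
        if remaining_ms() < SAFETY_MARGIN_MS:
            return i  # leave the rest for another invocation
        work(items[i])
        i += 1
    return None


def handler(event, context):
    cursor = process_until_deadline(
        event["items"],
        event.get("cursor", 0),
        context.get_remaining_time_in_millis,
        lambda item: None,  # placeholder for the real per-item work
    )
    # The caller re-invokes with this cursor until done is True.
    return {"done": cursor is None, "cursor": cursor}
```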

Finally, because serverless architectures are still quite new, they come with their share of tooling woes, specifically around logging, monitoring, and debugging, though CloudWatch and the Serverless Framework remedy much of this.
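
Much of the logging pain eases with structured logs. Anything a Lambda function writes to stdout ends up in CloudWatch Logs, so emitting one JSON object per line, tagged with the invocation's `aws_request_id` from the context object, makes logs far easier to filter and query (for example with CloudWatch Logs Insights). The field names below are an arbitrary choice for this sketch.

```python
import json
import time


def log(context, level: str, message: str, **fields) -> str:
    """Emit one JSON object per line; Lambda forwards stdout to CloudWatch Logs."""
    record = {
        "ts": time.time(),
        "level": level,
        "message": message,
        # aws_request_id ties every line back to a single invocation.
        "request_id": getattr(context, "aws_request_id", None),
        **fields,
    }
    line = json.dumps(record)
    print(line)
    return line


def handler(event, context):
    started = time.monotonic()
    log(context, "INFO", "request received", keys=sorted(event))
    result = {"ok": True}
    log(context, "INFO", "request finished",
        duration_ms=round((time.monotonic() - started) * 1000, 2))
    return result
```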

Benefits

- Significant cost savings for most services

- Potential performance gains

- Transparent auto-scaling and high availability

Limits

- Maximum execution time constraints for long-running tasks

- Concurrency limits for high-traffic services within a single AWS account

- Auto-scaling speed for spiky traffic patterns

- Limited tooling around logging, monitoring, and debugging

Despite these caveats, AWS Lambda and a serverless architecture can provide substantial benefits for many teams. Serverless architectures can deliver massive cost savings, performance gains, and decreased infrastructure management but, as always, weigh them against your specific use case. Check back, or follow along on Twitter, for Part 2, where we walk through the steps to build your next application on AWS with Lambda and API Gateway, the design decisions you’ll want to consider, and the successes we have enjoyed.