Amazon’s vast array of web services continues to grow, expanding on its already comprehensive offerings for web solutions of all kinds. Meanwhile, its market share remains dominant (nearly threefold that of Microsoft, Google, or IBM), and companies of every size are using Amazon Web Services to great success. Leaders in tech, from Netflix and Airbnb to NASA, Intuit, and Spotify, have all embraced the Amazon cloud platform. When building a new web solution, the benefits and drawbacks of any platform should be evaluated case by case, but it’s increasingly hard to deny the benefits of Amazon Web Services.

Having used Amazon’s services for some time now, I felt it was time to put my know-how to the test with the Amazon Web Services Solutions Architect certification. This required brushing up on a number of AWS services and concepts, from virtual private clouds, security groups, route tables, and subnetting to DynamoDB data modeling and partitioning. To conclude these studies and validate my understanding, I put together a quick-and-dirty full-stack web solution that incorporates as many Amazon offerings as I could sensibly fit. You can check it out here on GitHub.

Here I want to walk through the technologies and steps for building your next web solution in AWS. To keep this focused, the walkthrough covers a React/Redux client application, a Node/Express REST API, and the AWS architecture for hosting and wiring it all together securely and scalably, automated with HashiCorp’s Terraform (see also CloudFormation). Many resources detail the individual pieces; here we will try to bridge the gap by tying it all together!

Building the Front-End

A declarative, component-based, high-performance view layer in React, along with Redux for managing state and asynchronous complexity, lets us create a snappy experience for our end users. This one-two combo has been one of my favorite recent advances in the fast-moving front-end world.

The client application is made up of static JavaScript, HTML, and CSS, bundled with Webpack and rendered in the user’s web browser. Using React and Redux, the client application handles routing, optimistic updates against our back-end, and efficient rendering of content updates to the DOM.

We use the Redux Thunk middleware to manage asynchronous HTTP requests to our back-end API in a composable manner. (In a future post I’ll write about why I’ve increasingly enjoyed using Redux Saga and Redux Loop rather than thunks.)
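The core idea behind Redux Thunk is small: the middleware lets `dispatch` accept a function, which it calls with `dispatch` and `getState`. Below is a rough sketch (not code from the example repo) that hand-rolls that behavior instead of importing `redux`/`redux-thunk`, so it runs on its own. It shows an optimistic update: the todo appears immediately and is confirmed or rolled back when a hypothetical `saveTodo` API call settles. The todo shape and action names are illustrative assumptions.

```typescript
// Hand-rolled sketch of the Redux Thunk idea (no redux/redux-thunk packages):
// dispatch accepts either a plain action or a function (a "thunk").
// The todo domain, action names, and saveTodo are hypothetical.

interface Todo {
  id: string;
  text: string;
  pending: boolean; // true until the API confirms the write
}

interface State {
  todos: Todo[];
}

type Action =
  | { type: "todo/added"; todo: Todo }
  | { type: "todo/confirmed"; id: string }
  | { type: "todo/rolledBack"; id: string };

type Dispatch = (action: Action | Thunk) => unknown;
type Thunk = (dispatch: Dispatch, getState: () => State) => unknown;

function reducer(state: State, action: Action): State {
  switch (action.type) {
    case "todo/added":
      return { todos: [...state.todos, action.todo] };
    case "todo/confirmed":
      return {
        todos: state.todos.map(t => (t.id === action.id ? { ...t, pending: false } : t)),
      };
    case "todo/rolledBack":
      return { todos: state.todos.filter(t => t.id !== action.id) };
    default:
      return state;
  }
}

// Minimal store whose dispatch mimics redux-thunk: functions are called with
// (dispatch, getState); plain action objects go through the reducer.
function createThunkStore(initial: State) {
  let state = initial;
  const getState = (): State => state;
  const dispatch: Dispatch = action => {
    if (typeof action === "function") return action(dispatch, getState);
    state = reducer(state, action);
    return action;
  };
  return { dispatch, getState };
}

// Hypothetical API call; in a real client this would be a fetch() to the REST API.
type SaveTodo = (todo: Todo) => Promise<void>;

// Thunk action creator: update the UI optimistically, then confirm or roll back.
function addTodo(id: string, text: string, saveTodo: SaveTodo): Thunk {
  return dispatch => {
    const todo: Todo = { id, text, pending: true };
    dispatch({ type: "todo/added", todo }); // shown immediately
    return saveTodo(todo).then(
      () => dispatch({ type: "todo/confirmed", id }),
      () => dispatch({ type: "todo/rolledBack", id }),
    );
  };
}
```

With the real libraries, the middleware is installed through Redux’s `applyMiddleware`, and an action creator like `addTodo` keeps the same shape.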

An example client application created for this exercise can be seen here on GitHub.

Building the Back-End

The back-end is a REST API responsible for handling HTTP requests from our front-end and communicating with managed Amazon services (e.g. SQS, DynamoDB, RDS). It could also be extended to authenticate and integrate with custom-built mobile apps or other third-party services.

Node.js was chosen for the back-end because its development speed and performance are fantastic. It has increasingly become an industry go-to for building REST APIs, and though you may find benchmarks telling whichever story you fancy (depending on libraries, how the software is built, etc.), I have been continually impressed with the performance Node achieves with relatively little development time and hardware. Node’s event-driven, non-blocking I/O model, its vast npm ecosystem of open-source libraries, and Google’s rapid advances in the V8 engine make it a great technology choice.

Express is the de facto standard server framework for Node.js, though there are a number of other players to consider. Express will handle routing, request parsing, and content negotiation for our API.
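To make “Express will handle routing” concrete, here is a simplified illustration of what a router does: it matches the HTTP method and a path pattern such as `/items/:id`, extracts named parameters, and calls a handler. This is a hypothetical stand-in written without Express so it runs on its own. It is not Express’s implementation, and the `/items` route and in-memory data are assumptions for the example.

```typescript
// Simplified illustration of Express-style routing: match the HTTP method and
// a path pattern like "/items/:id", extract named params, call the handler.
// Not Express's implementation; the /items route and data are hypothetical.

type Params = Record<string, string>;
interface Reply {
  status: number;
  body: unknown;
}
type Handler = (params: Params) => Reply;

interface Route {
  method: string;
  segments: string[];
  handler: Handler;
}

const routes: Route[] = [];

function addRoute(method: string, pattern: string, handler: Handler): void {
  routes.push({ method, segments: pattern.split("/").filter(Boolean), handler });
}

function handle(method: string, path: string): Reply {
  const parts = path.split("/").filter(Boolean);
  for (const route of routes) {
    if (route.method !== method || route.segments.length !== parts.length) continue;
    const params: Params = {};
    // Every segment must either be a ":param" placeholder or match literally.
    const matched = route.segments.every((segment, i) => {
      if (segment.startsWith(":")) {
        params[segment.slice(1)] = decodeURIComponent(parts[i]);
        return true;
      }
      return segment === parts[i];
    });
    if (matched) return route.handler(params);
  }
  return { status: 404, body: { error: "Not Found" } };
}

// Hypothetical data store; a real handler would query DynamoDB or RDS instead.
const items = new Map<string, string>([["42", "widget"]]);

addRoute("GET", "/items/:id", ({ id }) => {
  const name = items.get(id);
  return name === undefined
    ? { status: 404, body: { error: "Not Found" } }
    : { status: 200, body: { id, name } };
});
```

In Express itself, the equivalent route is registered with `app.get("/items/:id", (req, res) => ...)`, and the placeholder value is available as `req.params.id`.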

An example API backend created for this exercise can be seen here on GitHub.

AWS Environment Setup and Security

With our application built, it’s time to dive into AWS. A few principles we must keep in mind are high availability, scalability/performance, and security. Luckily, Amazon will handle a lot of this for us if our architecture is set up correctly!

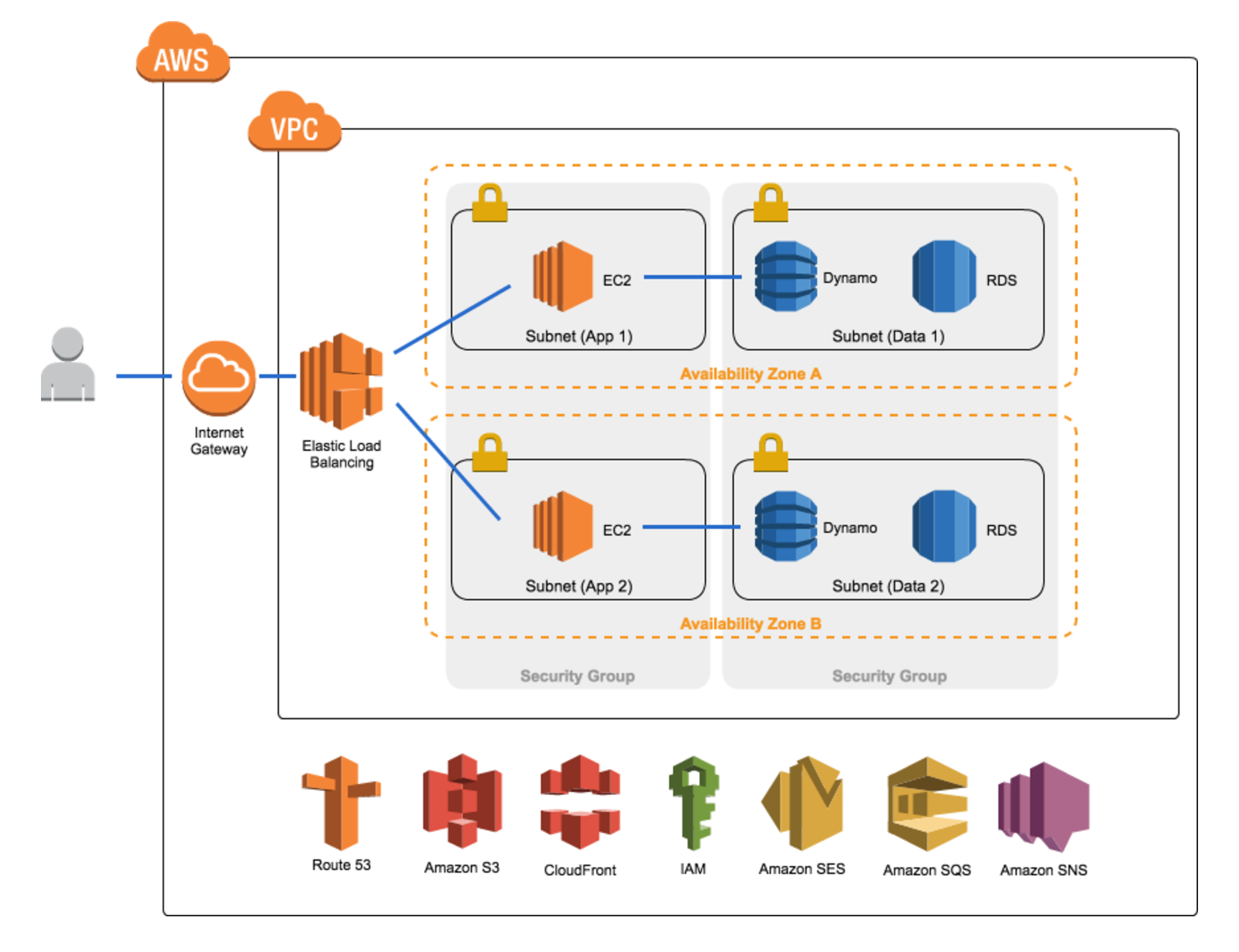

First and foremost, we need to set up our Virtual Private Cloud (VPC). If you have used AWS since 2013, chances are you’ve already used a VPC, since newer accounts launch EC2 instances into a default VPC. VPCs provide a secure, isolated environment for our application with full control over internal IP address ranges, subnets, and network access, similar to a traditional on-premises network.

Within the VPC we create subnets, each a range of IP addresses denoted by a CIDR block. Though a VPC spans all the Availability Zones in its region, each subnet resides in a single Availability Zone, so we set up subnets in multiple zones to maintain high availability in case of disaster. We also need both public and private subnets to follow security best practices. Our public subnets are reachable from the internet via an internet gateway, whereas our private subnets are locked down with no inbound access from the internet, though we can still allow outbound internet access (for software updates, etc.) through NAT.

NAT is available either as a NAT instance (an EC2 instance launched from a NAT AMI) or through the managed AWS NAT gateway service. A NAT gateway gives you built-in redundancy within its Availability Zone without any extra work (deploy one per zone for multi-AZ resilience), whereas a NAT instance gives you complete control of the NAT.
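Choosing CIDR blocks is just arithmetic on 32-bit addresses. The sketch below assumes a hypothetical VPC range of `10.0.0.0/16` and two hypothetical zones, `us-east-1a` and `us-east-1b`. It carves that range into equal-sized /24 subnets and assigns one public and one private subnet per zone.

```typescript
// Sketch: carve a VPC CIDR block into equal-sized subnets, e.g. one public and
// one private subnet per Availability Zone. The 10.0.0.0/16 range and the
// us-east-1a/us-east-1b zones are hypothetical examples.

function ipToInt(ip: string): number {
  // Plain arithmetic (not bitwise ops) avoids 32-bit signed overflow in JS.
  return ip.split(".").reduce((acc, octet) => acc * 256 + Number(octet), 0);
}

function intToIp(n: number): string {
  return [2 ** 24, 2 ** 16, 2 ** 8, 1].map(unit => Math.floor(n / unit) % 256).join(".");
}

// Return the first `count` subnets of size /newPrefix inside `cidr`.
function splitCidr(cidr: string, newPrefix: number, count: number): string[] {
  const [base, prefixText] = cidr.split("/");
  const prefix = Number(prefixText);
  if (newPrefix < prefix || newPrefix > 32) throw new Error("invalid subnet prefix");
  const available = 2 ** (newPrefix - prefix);
  if (count > available) throw new Error(`only ${available} subnets fit in ${cidr}`);
  const blockSize = 2 ** (32 - prefix);
  const start = Math.floor(ipToInt(base) / blockSize) * blockSize; // align to network address
  const subnetSize = 2 ** (32 - newPrefix);
  return Array.from({ length: count }, (_, i) => `${intToIp(start + i * subnetSize)}/${newPrefix}`);
}

// Hypothetical layout: public subnets first, then private, one of each per AZ.
const zones = ["us-east-1a", "us-east-1b"];
const blocks = splitCidr("10.0.0.0/16", 24, zones.length * 2);
const layout = zones.map((az, i) => ({
  az,
  publicSubnet: blocks[i],
  privateSubnet: blocks[zones.length + i],
}));
```

Keep in mind that AWS reserves five addresses in every subnet (the first four and the last), so each /24 here leaves 251 usable addresses.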

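Network access control lists (ACLs) are then configured for our subnets. These act as stateless firewalls, controlling traffic in and out of our subnets. Every subnet is associated with a network ACL. If you don’t assign one, the subnet uses the VPC’s default network ACL. Network ACLs contain numbered lists of rules that are evaluated in ascending order, and the first rule that matches the traffic decides whether it is allowed in or out. The default network ACL allows all inbound and outbound traffic, while a newly created custom network ACL denies all traffic until you add rules. In either case, traffic that matches no numbered rule is denied by a final catch-all rule.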
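The evaluation order is easy to get wrong, so here is a simplified model of it. Rules are sorted by rule number, the first match decides, and anything unmatched falls through to the implicit deny. This is a sketch that only models protocol, a port range, and a source CIDR, and the rules themselves are hypothetical.

```typescript
// Simplified model of network ACL evaluation: rules are checked in ascending
// rule-number order, the first matching rule decides, and unmatched traffic
// falls through to the implicit deny ("*" rule). Only protocol, a port range,
// and a source CIDR are modeled; the rules below are hypothetical.

interface NaclRule {
  ruleNumber: number;
  protocol: "tcp" | "udp" | "all";
  fromPort: number;
  toPort: number;
  cidr: string;
  action: "allow" | "deny";
}

interface Packet {
  protocol: "tcp" | "udp";
  port: number;
  sourceIp: string;
}

function ipToInt(ip: string): number {
  return ip.split(".").reduce((acc, octet) => acc * 256 + Number(octet), 0);
}

function inCidr(ip: string, cidr: string): boolean {
  const [base, bits] = cidr.split("/");
  const blockSize = 2 ** (32 - Number(bits));
  return Math.floor(ipToInt(ip) / blockSize) === Math.floor(ipToInt(base) / blockSize);
}

function evaluateInbound(rules: NaclRule[], packet: Packet): "allow" | "deny" {
  const ordered = [...rules].sort((a, b) => a.ruleNumber - b.ruleNumber);
  for (const rule of ordered) {
    const protocolMatches = rule.protocol === "all" || rule.protocol === packet.protocol;
    const portMatches = packet.port >= rule.fromPort && packet.port <= rule.toPort;
    if (protocolMatches && portMatches && inCidr(packet.sourceIp, rule.cidr)) {
      return rule.action; // first match wins; later rules are never consulted
    }
  }
  return "deny"; // implicit catch-all rule
}

// Hypothetical inbound rules for a public subnet.
const publicInbound: NaclRule[] = [
  { ruleNumber: 100, protocol: "tcp", fromPort: 443, toPort: 443, cidr: "0.0.0.0/0", action: "allow" },
  { ruleNumber: 90, protocol: "all", fromPort: 0, toPort: 65535, cidr: "203.0.113.0/24", action: "deny" },
];
```

Rule 90 blocks the listed range even on port 443, because it is checked before rule 100. Because network ACLs are stateless, a real setup also needs an outbound rule for the ephemeral ports (e.g. 1024-65535) that responses are sent to.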

In addition to network ACLs, we can set up security groups for the Amazon services we will be building out. Security groups act as virtual firewalls at the instance level rather than the subnet level (see a comparison here). A security group contains lists of inbound and outbound ‘allow’ rules, and anything not listed is denied. Unlike network ACLs, security groups are stateful: if a request from our services is allowed out, its response is always allowed back in, regardless of the inbound rules. For example, a security group for our database layer might allow all outbound traffic but only inbound TCP traffic on port 3306 (MySQL’s default port) from IP addresses within our VPC. No external traffic could then reach the DB layer unless the connection was initiated from within, while our back-end instances could still make DB calls.
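Statefulness is what separates a security group from a network ACL, and a small model makes the difference visible. The sketch below tracks outbound connections and admits their responses automatically. Anything else must match an explicit allow rule. It mirrors the database-tier example from the paragraph above, using a hypothetical VPC range of `10.0.0.0/16`. Real connection tracking keys on the full protocol/address/port tuple, which is simplified here.

```typescript
// Simplified model of a stateful security group: only "allow" rules exist,
// and responses to connections the group already let through are admitted
// automatically. The DB-tier rules mirror the example in the text
// (TCP 3306 from a hypothetical VPC range 10.0.0.0/16).

interface AllowRule {
  port: number | "all";
  cidr: string;
}

function ipToInt(ip: string): number {
  return ip.split(".").reduce((acc, octet) => acc * 256 + Number(octet), 0);
}

function inCidr(ip: string, cidr: string): boolean {
  const [base, bits] = cidr.split("/");
  const blockSize = 2 ** (32 - Number(bits));
  return Math.floor(ipToInt(ip) / blockSize) === Math.floor(ipToInt(base) / blockSize);
}

function ruleMatches(rule: AllowRule, ip: string, port: number): boolean {
  return (rule.port === "all" || rule.port === port) && inCidr(ip, rule.cidr);
}

class SecurityGroup {
  private readonly inbound: AllowRule[];
  private readonly outbound: AllowRule[];
  private readonly trackedFlows = new Set<string>(); // remote "ip:port" we connected to

  constructor(inbound: AllowRule[], outbound: AllowRule[]) {
    this.inbound = inbound;
    this.outbound = outbound;
  }

  // Outbound connection: needs an outbound rule; if allowed, it is tracked.
  connect(remoteIp: string, remotePort: number): boolean {
    const allowed = this.outbound.some(rule => ruleMatches(rule, remoteIp, remotePort));
    if (allowed) this.trackedFlows.add(`${remoteIp}:${remotePort}`);
    return allowed;
  }

  // Inbound packet: allowed if it answers a tracked flow or an inbound rule matches.
  accept(sourceIp: string, sourcePort: number, localPort: number): boolean {
    if (this.trackedFlows.has(`${sourceIp}:${sourcePort}`)) return true;
    return this.inbound.some(rule => ruleMatches(rule, sourceIp, localPort));
  }
}

const dbTier = new SecurityGroup(
  [{ port: 3306, cidr: "10.0.0.0/16" }], // MySQL only, and only from inside the VPC
  [{ port: "all", cidr: "0.0.0.0/0" }], // all outbound traffic allowed
);
```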

An example Terraform architecture created for this exercise can be seen here on GitHub.

EC2 Instances, AMIs, and Load Balancing

Next, we set up our servers using Amazon EC2 (Elastic Compute Cloud). EC2 instances are resizable virtual machines where we will host our back-end API (see also AWS ECS). EC2 instances should be treated as ephemeral, so data stored on them (especially on instance store volumes) is temporary. Persistent storage such as EFS (Elastic File System) or EBS (Elastic Block Store) should therefore be used as needed, though we’ll aim to keep our back-end stateless, so we won’t need it here.

In another post I hope to delve into the new serverless architecture pattern utilizing Lambdas and AWS API Gateway.

AMIs (Amazon Machine Images) are what each of our instances boots from. Custom AMIs are built from an Amazon base AMI, and tools like Packer, Puppet, Ansible, Chef, and Salt are great for provisioning the image. Alternatively, for basic setups you can stick with a base AMI and have startup scripts do what you need: when you launch an EC2 instance you can pass in user data, which cloud-init executes on the instance at boot. In the example GitHub repo I do exactly that as a shortcut to install our application and its dependencies on instance startup.
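For a sense of what such a startup script looks like, the sketch below renders a bash user-data script from a few parameters. Everything in it is an assumption for illustration and not the script from the example repo. That includes the repository URL, the checkout directory, a yum-based AMI with Node.js and npm already installed, and an `npm start` entry point.

```typescript
// Sketch: render the user-data script handed to EC2 at launch and run by
// cloud-init on boot. The repository URL, directory, yum-based AMI, and
// `npm start` entry point are hypothetical placeholders.

interface BootConfig {
  repoUrl: string; // hypothetical Git URL of the API
  appDir: string; // checkout location on the instance
  port: number; // port the Express server should listen on
}

function renderUserData(cfg: BootConfig): string {
  const lines = [
    "#!/bin/bash",
    "set -euo pipefail", // abort on the first failing command
    "yum install -y git", // assumes a yum-based AMI with Node.js/npm preinstalled
    `git clone ${cfg.repoUrl} ${cfg.appDir}`,
    `cd ${cfg.appDir}`,
    "npm ci", // install exact versions from package-lock.json
    // Run in the background so cloud-init can finish; a systemd unit is sturdier.
    `PORT=${cfg.port} nohup npm start > /var/log/api.log 2>&1 &`,
  ];
  return lines.join("\n") + "\n";
}

const userData = renderUserData({
  repoUrl: "https://example.com/your-org/api.git",
  appDir: "/opt/api",
  port: 3000,
});
```

By default, cloud-init runs shell-script user data as root and only on the instance’s first boot, so anything that must happen on every restart belongs in a service definition instead.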

Focusing on high availability and scalability, we want to scale our application horizontally. Amazon’s Auto Scaling groups manage our EC2 instances, increasing the instance count (and thus our overall capacity) as needed. As traffic decreases, they elastically reduce the instance count to save resources. Using health checks, the Auto Scaling group terminates unhealthy instances and launches replacements when critical errors arise. It can rely on EC2 status checks or on the load balancer’s health checks, which can probe at the transport layer (e.g. TCP) or the application layer (e.g. HTTP).
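The scaling and self-healing behavior can be sketched in a few lines. The version below sizes the group proportionally so that average CPU lands near a target, in the spirit of a target-tracking policy, and then plans replacements for unhealthy instances. It is a simplified illustration under assumed thresholds and hypothetical instance IDs. AWS’s actual algorithm adds cooldowns, warm-up periods, and other safeguards.

```typescript
// Simplified scaling logic in the spirit of a target-tracking policy: keep
// average CPU near a target by sizing the group proportionally, clamped to
// min/max. Unhealthy instances are replaced. Thresholds and instance IDs are
// hypothetical; AWS's actual algorithm has additional safeguards.

interface GroupConfig {
  min: number;
  max: number;
  targetCpuPercent: number;
}

interface Instance {
  id: string;
  healthy: boolean;
}

// N instances averaging U% CPU need about N * U / T instances to average T%.
function desiredCapacity(current: number, avgCpuPercent: number, cfg: GroupConfig): number {
  if (current <= 0) return cfg.min;
  const raw = Math.ceil((current * avgCpuPercent) / cfg.targetCpuPercent); // round up: never under-provision
  return Math.min(cfg.max, Math.max(cfg.min, raw));
}

// Terminate unhealthy instances (plus any surplus when scaling in) and
// report how many new instances to launch to reach the desired count.
function planActions(instances: Instance[], desired: number): { terminate: string[]; launch: number } {
  const unhealthy = instances.filter(i => !i.healthy).map(i => i.id);
  const healthy = instances.filter(i => i.healthy).map(i => i.id);
  const surplus = healthy.slice(desired);
  const kept = healthy.length - surplus.length;
  return { terminate: [...unhealthy, ...surplus], launch: Math.max(0, desired - kept) };
}

const groupConfig: GroupConfig = { min: 2, max: 10, targetCpuPercent: 60 };
```

For example, four instances averaging 90% CPU against a 60% target yields six instances, while the `min` and `max` bounds keep the group from collapsing or running away.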

In front of the Auto Scaling group, an Elastic Load Balancer distributes traffic among the running instances to maintain high performance (see also the newer Application Load Balancers). The load balancer’s listeners define the protocols and ports it accepts traffic on. We’ll use a load balancer spanning multiple Availability Zones, with instances in each zone, for high availability in case of disaster. When traffic arrives, the ELB routes it to a healthy instance across the Availability Zones, so requests are handled quickly and efficiently as our application scales.
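As a rough illustration of “route to a healthy instance”, the sketch below rotates requests round-robin across healthy targets in several zones and skips any target that fails its health check. Round robin is the default algorithm for Application Load Balancer target groups. The instance IDs and zones are hypothetical.

```typescript
// Sketch of round-robin distribution across healthy targets in several
// Availability Zones. Round robin is the default algorithm for Application
// Load Balancer target groups; instance IDs and zones here are hypothetical.

interface Target {
  id: string;
  az: string;
  healthy: boolean; // as reported by the load balancer's health checks
}

class RoundRobinBalancer {
  private readonly targets: Target[];
  private counter = 0;

  constructor(targets: Target[]) {
    this.targets = targets;
  }

  // Skip unhealthy targets, then rotate through the healthy ones in order.
  pick(): Target {
    const healthy = this.targets.filter(t => t.healthy);
    if (healthy.length === 0) throw new Error("no healthy targets");
    const target = healthy[this.counter % healthy.length];
    this.counter += 1;
    return target;
  }
}

const targets: Target[] = [
  { id: "i-a", az: "us-east-1a", healthy: true },
  { id: "i-b", az: "us-east-1b", healthy: true },
  { id: "i-c", az: "us-east-1a", healthy: false },
];
const balancer = new RoundRobinBalancer(targets);
```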

Database Layer (Relational or NoSQL)

To store and query data associated with our application, we will need a database. The database technology you choose will depend on a number of factors: the access patterns you anticipate, the quantity of data, and whether you prioritize availability or consistency, to name a few. Part of this decision is whether you want a traditional relational database or a NoSQL database. This is an area of particular complexity, where a one-size-fits-all answer would be inadequate.

At a high level, consider a relational database, such as those offered by AWS RDS, if data integrity (strong typing and schemas, atomicity, consistency) is highly desirable or the data is inherently relational and queries will require many joins. Consider a NoSQL database such as AWS DynamoDB if massive write throughput is required, flexible schemas are desired, or your data is hierarchical or graph-like in nature. A cache such as Redis (e.g. via AWS ElastiCache) should also be examined to alleviate load on your databases. More than this I’ll leave for another article!
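The usual way a cache like Redis takes load off the database is the cache-aside pattern. On a read, the application checks the cache first. On a miss, it queries the database and stores the result with a time-to-live (TTL). In the sketch below, an in-memory `Map` stands in for Redis/ElastiCache, and the loader is a hypothetical synchronous database call. Real clients would be asynchronous, and time is passed in explicitly to keep the example deterministic.

```typescript
// Cache-aside sketch: read from the cache first, fall back to the database on
// a miss, then store the result with a TTL. An in-memory Map stands in for
// Redis/ElastiCache, and the loader is a hypothetical (synchronous) DB call.
// Time is passed in explicitly to keep the example deterministic.

class TtlCache<V> {
  private readonly entries = new Map<string, { value: V; expiresAt: number }>();

  get(key: string, nowMs: number): V | undefined {
    const entry = this.entries.get(key);
    if (entry === undefined) return undefined;
    if (entry.expiresAt <= nowMs) {
      this.entries.delete(key); // expired: evict and treat as a miss
      return undefined;
    }
    return entry.value;
  }

  set(key: string, value: V, ttlMs: number, nowMs: number): void {
    this.entries.set(key, { value, expiresAt: nowMs + ttlMs });
  }
}

function getWithCache<V>(
  cache: TtlCache<V>,
  key: string,
  ttlMs: number,
  nowMs: number,
  loadFromDb: (key: string) => V,
): V {
  const cached = cache.get(key, nowMs);
  if (cached !== undefined) return cached;
  const value = loadFromDb(key); // only misses reach the database
  cache.set(key, value, ttlMs, nowMs);
  return value;
}

// Hypothetical loader that counts how often the "database" is hit.
let dbReads = 0;
const loadUser = (id: string): string => {
  dbReads += 1;
  return `user-${id}`;
};
const userCache = new TtlCache<string>();
```

The TTL bounds how stale a cached value can get. Shorter TTLs mean fresher data but more database reads.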

S3 Client Hosting and CloudFront CDN

Now we are ready to publish our client application to AWS S3 (Simple Storage Service). Along with EC2, S3 is one of AWS’s flagship services. S3 handles our availability and data durability needs for hosting the client application; in fact, S3 Standard is designed for 99.999999999% durability and 99.99% availability. That’s pretty darn impressive! Simply uploading the Webpack bundle with our React/Redux client to S3 and setting its ACL to public-read will do the trick (buckets created since 2023 block public ACLs by default, so that setting has to be relaxed first).

To reduce latency and make our client application really snappy, we can use Amazon’s content delivery network, CloudFront. CloudFront is a global network of edge locations that cache our static content closer to end users, so users in Germany or China don’t have to wait for our static assets to arrive from across the ocean. A CDN can also help combat DDoS (distributed denial of service) attacks by filtering malformed requests and restricting access based on the requester’s geographic location.
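How well CloudFront and browsers cache the bundle depends largely on the headers stored with each S3 object. The sketch below picks `Content-Type` and `Cache-Control` values per file before upload. It assumes Webpack is configured to emit content-hashed file names (for example via `[contenthash]`) such as `main.3f9a2b1c.js`. Those files can be cached for a year, while `index.html` is revalidated so new deploys show up. The file names and type table are illustrative.

```typescript
// Sketch: choose Content-Type and Cache-Control headers for each file of the
// Webpack build before uploading it to S3, so CloudFront and browsers can
// cache aggressively. Assumes Webpack emits content-hashed names such as
// main.3f9a2b1c.js (e.g. via [contenthash]); the file names are hypothetical.

const contentTypes: Record<string, string> = {
  html: "text/html; charset=utf-8",
  js: "text/javascript; charset=utf-8",
  css: "text/css; charset=utf-8",
  svg: "image/svg+xml",
  png: "image/png",
};

// A hex hash segment right before the extension marks a fingerprinted asset.
const HASHED_ASSET = /\.[0-9a-f]{8,}\.[a-z0-9]+$/;

function uploadHeaders(fileName: string): { contentType: string; cacheControl: string } {
  const ext = fileName.slice(fileName.lastIndexOf(".") + 1).toLowerCase();
  const contentType = contentTypes[ext] ?? "application/octet-stream";
  // Fingerprinted files never change under the same name, so cache them for a
  // year; everything else (notably index.html) is revalidated on each request.
  const cacheControl = HASHED_ASSET.test(fileName)
    ? "public, max-age=31536000, immutable"
    : "no-cache";
  return { contentType, cacheControl };
}
```

These values are stored as object metadata at upload time. CloudFront respects the origin’s `Cache-Control` max-age within the minimum and maximum TTLs configured on the distribution.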

IAM Roles and Securing Cloud Credentials

Amazon’s Identity and Access Management (IAM) service gives us granular access control over our resources. Here we register user accounts for developers and administrators, create groups and assign users to them, and create roles that grant access to our services. Amazon emphasizes the principle of least privilege, which we’ll adhere to by granting only the minimal permissions our users and services need.

We’ll need to create an IAM user for ourselves, as it’s best never to use your account’s root credentials. We’ll also need to generate access keys (an access key ID and a secret access key) for a user dedicated to our automation tooling (i.e. Terraform).

To grant our back-end instances access to the database and other AWS services, we’ll assign them an IAM role. Along with user data, we can attach a role to our EC2 instances at launch through an instance profile. This role will need access to our database, as well as any other services we may be using. Granting credentials via an IAM role is great because AWS delivers temporary credentials to each instance and rotates them automatically, which removes the complexity of distributing credentials securely on startup and rotating them ourselves. It also means we don’t need to store long-lived secret credentials on EC2.
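An instance role is defined by two JSON policy documents. The trust policy says who may assume the role (here, the EC2 service). The permissions policy says what the role may do. The sketch below builds both as TypeScript objects, with least-privilege access to a single DynamoDB table. The account ID, region, table name, and exact list of actions are hypothetical and should be trimmed to what the API actually calls.

```typescript
// Sketch of the two IAM policy documents behind an EC2 instance role:
// a trust policy letting EC2 assume the role, and a least-privilege
// permissions policy for one DynamoDB table. The account ID, region, and
// table name are hypothetical; adjust the actions to what the API really uses.

interface Statement {
  Effect: "Allow" | "Deny";
  Action: string | string[];
  Resource?: string | string[];
  Principal?: { Service: string };
}

interface PolicyDocument {
  Version: "2012-10-17";
  Statement: Statement[];
}

// Trust policy: who may assume the role (here, the EC2 service).
const ec2TrustPolicy: PolicyDocument = {
  Version: "2012-10-17",
  Statement: [
    { Effect: "Allow", Principal: { Service: "ec2.amazonaws.com" }, Action: "sts:AssumeRole" },
  ],
};

// Permissions policy: only the item-level calls the API needs, on one table.
function tableAccessPolicy(tableArn: string): PolicyDocument {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Action: ["dynamodb:GetItem", "dynamodb:PutItem", "dynamodb:UpdateItem", "dynamodb:Query"],
        Resource: tableArn,
      },
    ],
  };
}

const tablePolicy = tableAccessPolicy("arn:aws:dynamodb:us-east-1:123456789012:table/Items");
const tablePolicyJson = JSON.stringify(tablePolicy, null, 2);
```

With the role attached through an instance profile, AWS SDKs running on the instance pick up its temporary credentials from the instance metadata service automatically, so no keys appear in code or configuration.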

An alternative way to pass credentials to our EC2 instances is via a secured S3 bucket. To do this, we would lock the bucket down so it is accessible only from our EC2 instances, using a bucket policy restricted to a VPC endpoint. Before communicating with other services, our instances would fetch these credentials from S3 and keep them in an environment variable or temporarily in an application variable.
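The lock-down is typically written as a bucket policy that denies every request not arriving through a specific VPC endpoint, using the `aws:SourceVpce` condition key. The sketch below generates such a policy. The bucket name and endpoint ID are hypothetical.

```typescript
// Sketch of an S3 bucket policy that denies every request not arriving
// through a specific VPC endpoint, using the aws:SourceVpce condition key.
// The bucket name and endpoint ID are hypothetical.

function vpceOnlyBucketPolicy(bucket: string, vpceId: string) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Sid: "DenyUnlessFromVpcEndpoint",
        Effect: "Deny",
        Principal: "*",
        Action: "s3:*",
        // Cover both the bucket itself and every object in it.
        Resource: [`arn:aws:s3:::${bucket}`, `arn:aws:s3:::${bucket}/*`],
        Condition: { StringNotEquals: { "aws:SourceVpce": vpceId } },
      },
    ],
  };
}

const bucketPolicy = vpceOnlyBucketPolicy("my-app-credentials", "vpce-1a2b3c4d");
```

A deny like this only narrows access. The instances still need an allow (for example `s3:GetObject` in their role), and it also blocks console access from outside the VPC, administrators included.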

Route53 DNS

With our client application and back-end in place, it’s time to set up our DNS configuration in AWS Route53. Route53 will resolve our domain to the Amazon web services we have set up and can also provide DNS failover. Once we’ve registered our application’s domain, either through Route53 or another provider, we must configure our Route53 DNS entries.

First, we create a hosted zone for our application, a logical grouping of DNS entries associated with our app. There are a number of DNS entry types, but we’ll focus on NS, SOA, and A entries here. By default, for a public hosted zone, Amazon creates the name server (NS) and start of authority (SOA) entries for us. The NS record set lists the four Route53 name servers assigned to our app, while the SOA record set stores metadata about our domain.

With our hosted zone served by these four name servers, we next instruct Route53 to resolve our registered domain name to our client app. To do this we use an A record set. A record sets map domains to IPv4 addresses and come with a variety of routing policies: simple, weighted, latency, failover, and geolocation. Each serves a distinct purpose and should be chosen carefully. Simple routing maps a domain directly to its target, weighted routing splits traffic proportionally across multiple targets, latency routing sends traffic to the lowest-latency region, failover routing sends traffic to a backup resource in case of disaster, and geolocation routing sends traffic based on where the request originates.
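Weighted routing is the least intuitive of these, so here is a small sketch of the selection rule. Each record receives traffic in proportion to its weight divided by the sum of all weights. The parameter `r` stands in for a uniform random number in [0, 1) so the example is deterministic. The record values are documentation-range IPs used purely for illustration.

```typescript
// Sketch of weighted routing: each record receives traffic in proportion to
// weight / (sum of weights). `r` stands in for a uniform random number in
// [0, 1) so the example is deterministic; record values are hypothetical.

interface WeightedRecord {
  value: string;
  weight: number;
}

function pickWeighted(records: WeightedRecord[], r: number): string {
  const total = records.reduce((sum, rec) => sum + rec.weight, 0);
  if (total <= 0) throw new Error("at least one record needs a positive weight");
  let remaining = r * total;
  for (const rec of records) {
    if (remaining < rec.weight) return rec.value; // r fell inside this record's slice
    remaining -= rec.weight;
  }
  // Only reachable through floating-point rounding when r is just below 1.
  return records[records.length - 1].value;
}

const weightedRecords: WeightedRecord[] = [
  { value: "192.0.2.10", weight: 3 }, // ~75% of queries
  { value: "192.0.2.20", weight: 1 }, // ~25% of queries
];
```

Setting a record’s weight to 0 takes it out of rotation (unless every record in the group is weighted 0), which is handy for gradually shifting traffic between deployments.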

For this example, we’ll first create two A record sets with simple routing, configured as Route53 alias records pointing to the CloudFront distribution that hosts our client application (a CloudFront distribution has no fixed IP address, so an alias is used instead of a literal IP). One of these entries will have our domain with the ‘www’ prefix and the other without. We’ll also create A record sets with a failover routing policy so we can direct users to a pleasant custom error page in case of failure. The failover routing policy comes with a number of configurations of its own, but for simplicity we’ll use an active-passive configuration: a primary record for the app and a secondary record for the error page. Then we just need to associate a health check with the primary record. Now, when our app fails, DNS fails over to the custom error page.
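The active-passive behavior can be modeled in a few lines. The resolver answers with the primary record while its health check passes and falls back to the secondary otherwise. Like Route53’s failure threshold (three by default), the sketch only flips the status after that many consecutive failures, or consecutive successes when recovering, so a single blip doesn’t cause a failover. The record names are hypothetical.

```typescript
// Sketch of active-passive DNS failover: answer with the primary record while
// its health check passes, otherwise with the secondary (the error page).
// Like Route53's failure threshold (3 by default), the status only flips after
// that many consecutive failures or successes. Record values are hypothetical.

class HealthCheck {
  healthy = true;
  private streak = 0; // consecutive results that disagree with `healthy`
  private readonly threshold: number;

  constructor(threshold = 3) {
    this.threshold = threshold;
  }

  record(passed: boolean): void {
    if (passed === this.healthy) {
      this.streak = 0; // result agrees with the current status
      return;
    }
    this.streak += 1;
    if (this.streak >= this.threshold) {
      this.healthy = passed;
      this.streak = 0;
    }
  }
}

function resolveFailover(primary: string, secondary: string, check: HealthCheck): string {
  return check.healthy ? primary : secondary;
}

const appCheck = new HealthCheck();
const PRIMARY = "app-cloudfront-alias";
const SECONDARY = "error-page-alias";
```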

Wrap Up

Automation is key when creating the many pieces of a scalable, secure, and highly available architecture. AWS offers CloudFormation for this very reason, or you can use my preference, HashiCorp’s Terraform. With Terraform we can declaratively write out our entire architecture, from security groups to DynamoDB tables to IAM roles. This not only automates provisioning but also lets us version-control our architecture.

There are many more services we could discuss as well: Simple Queue Service for decoupling our services, Simple Notification Service for SMS messaging, Simple Email Service for automated email, ElastiCache for back-end caching, and CloudTrail for logging AWS API activity. The list goes on!

This has been a high-level, end-to-end walkthrough of building out a web solution in AWS. While many great articles detail each piece of this stack, I hope I’ve offered a comprehensive look at how all these technologies work together and the steps involved in building out your next application in AWS.

Please feel free to email me with any thoughts, comments, or questions! Follow me on Twitter and continue visiting my blog - AustinCorso.com for follow-up posts.