This three-part series compares 8 widely-adopted programming languages. Before comparing them, we discuss the key functions and internals of programming languages and runtimes at large. Part 1 covers compilation and data types, part 2 covers memory management and concurrency, and part 3 compares the languages. The 8 languages were chosen based on both adoption and personal experience working with them. They are categorized into 3 broad groups: compiled low-level, compiled high-level, and interpreted. Each language supports different programming paradigms, which we discuss first.

Paradigms

Programming languages can be classified into one or more paradigms; common paradigms include procedural, object-oriented, and functional. These paradigms encompass the execution model, state management, and code organization of the language. Modern languages often support multiple paradigms.

The imperative paradigm features state that may be modified from multiple locations in code and at different times. Procedural and object-oriented languages fall under this classification. Procedural languages (eg. C) focus on code executed as a sequence of instructions, whereas object-oriented languages model data as objects that contain the state they operate on, typically created from a class definition. Many older languages use procedural programming, which is closer to how machine-code is processed. Object-oriented programming largely evolved out of procedural programming, promoting encapsulation.

The declarative paradigm includes functional programming, which composes stateless functions and avoids mutating non-local state (such mutations are labeled “side-effects”). Higher-order functions are supported, where functions may be passed directly as arguments and returned from other functions. Recursion and immutability are emphasized. Functional programming is rooted in mathematics and has historically been less popular than imperative programming. Languages built around functional programming include Lisp, Clojure, and Haskell, though of these only Haskell is purely functional; Lisp and Clojure permit controlled mutation. Several modern languages support multiple paradigms, including functional programming (eg. JavaScript, Java), which has increased its adoption. With these paradigms in mind, we’ll dig into compilers.
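To make the contrast between the two styles concrete, here is a minimal Python sketch that computes the same total imperatively and functionally. The order amounts, the flat fee, and all function names are hypothetical and chosen only for illustration.

```python
from functools import reduce

FEE = 100  # hypothetical flat fee, in cents
orders = (1250, 4000, 725, 9900)  # hypothetical order amounts, in cents


def total_imperative(amounts, minimum):
    """Imperative style: a loop mutates the running total step by step."""
    total = 0
    for amount in amounts:
        if amount >= minimum:
            total += amount + FEE
    return total


def at_least(minimum):
    """Higher-order function: builds and returns a new predicate function."""
    return lambda amount: amount >= minimum


def add_fee(amount):
    """Side-effect-free function: it only reads its argument and a constant."""
    return amount + FEE


def total_functional(amounts, minimum):
    """Functional style: compose filter, map, and reduce without mutation."""
    large = filter(at_least(minimum), amounts)
    with_fees = map(add_fee, large)
    return reduce(lambda acc, amount: acc + amount, with_fees, 0)


imperative_total = total_imperative(orders, 1000)
functional_total = total_functional(orders, 1000)
print(imperative_total, functional_total)
```

Both functions return the same result; the functional version never reassigns a variable and passes functions around as values.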

Compilation

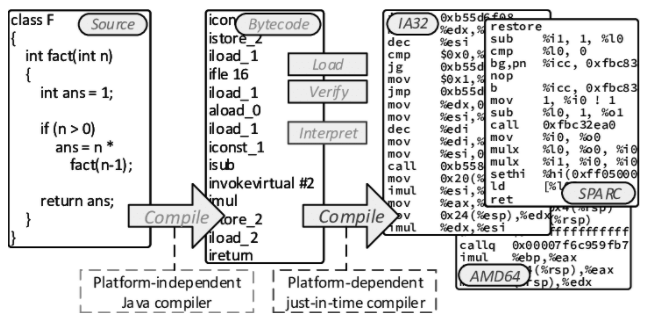

The key function of a programming language implementation is to turn logic written in human-readable syntax into machine-code a computer can execute. Source code is turned into machine-code through a compilation process which (1) turns the code into an abstract intermediate representation such as a syntax-tree, (2) applies optimizations, and then (3) generates machine-code. Compilation is often split into multiple phases: first compiling the high-level language to an intermediate language, and then compiling that to machine-code.
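As a rough illustration of these stages, CPython exposes parts of its own pipeline through the standard `ast` and `dis` modules. Note that CPython stops at byte-code for its virtual machine rather than emitting machine-code, so this sketch only covers the front half of the process. The expression and variable names are made up for the example.

```python
import ast
import dis

source = "price * quantity + 5"  # hypothetical expression to compile

# Stage 1: parse the source text into an abstract syntax tree (AST).
tree = ast.parse(source, mode="eval")
root = tree.body  # the top-level node is the addition

# Stages 2-3: CPython compiles the tree into byte-code for its VM,
# applying a few small optimizations along the way.
code = compile(tree, filename="<example>", mode="eval")

# The byte-code is then executed by the interpreter.
result = eval(code, {"price": 3, "quantity": 4})

print(ast.dump(tree))  # textual view of the syntax tree
dis.dis(code)          # human-readable listing of the byte-code
print(result)
```

The exact byte-code listing differs between Python versions, which is why it is printed here rather than described.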

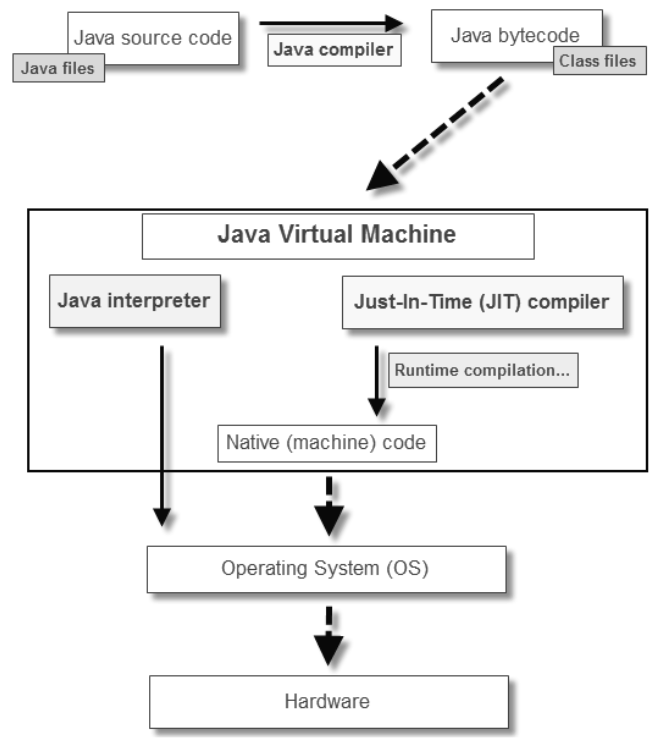

For compiled languages this happens ahead-of-time (AOT) during build-time, whereas for interpreted languages this is done at runtime. Build-time compilation is generally favored for its smaller runtime environment, faster startup, and more sophisticated optimizations. For example, C and Go are compiled languages which build directly to CPU-architecture-specific binary executables.

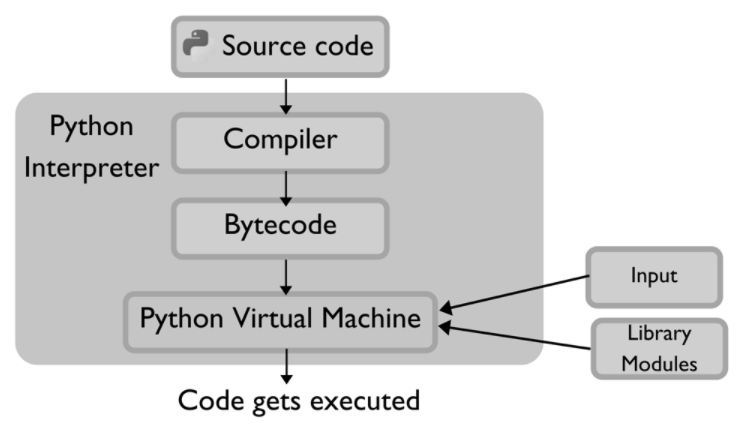

Interpreted languages (eg. Python with CPython, Ruby with MRI, PHP with the Zend Engine) are generally favored for flexibility and rapid development without an ahead-of-time compilation step. They can hinder runtime performance, though, as the interpreter must process the code each time the program is run, typically by translating it to byte-code and executing that byte-code in a loop rather than producing machine-code, and it applies far fewer optimizations. Compiled versus interpreted is best thought of as a spectrum, with some compiled languages having interpreters and some interpreted languages having build-time compilers. A language may even offer either option depending on the chosen implementation.

Several modern languages combine the strengths of ahead-of-time compilation and interpretation through Just-In-Time (JIT) compilation. A JIT compiler reads code at runtime, compiles it to machine-code, and caches the result so that later executions reuse it. JIT compilers have seen adoption because, unlike a pure AOT compiler, they can adapt to the runtime environment, while performing far better than a pure interpreter. Java, for example, uses a build-time compiler to turn Java code into JVM byte-code and a JIT compiler to translate frequently executed byte-code into machine-code at runtime; the HotSpot JVM ships more than one JIT compiler (eg. C1, C2). Once the JIT has compiled a piece of byte-code, it doesn’t need to compile it again on subsequent invocations. This is one reason Java code can become faster after its first executions. Python’s PyPy implementation also uses a JIT.
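The "interpret first, compile once it is hot, then reuse" idea can be sketched with a toy example. This is only an analogy: it compiles a small expression tree into a Python function (byte-code, not machine-code), and `HOT_THRESHOLD`, `TieredFunction`, and the tuple-based tree format are all hypothetical names invented for this sketch, not how any real JIT is implemented.

```python
HOT_THRESHOLD = 3  # hypothetical number of calls before compiling


def interpret(node, env):
    """Slow path: walk the expression tree on every call."""
    kind = node[0]
    if kind == "const":
        return node[1]
    if kind == "var":
        return env[node[1]]
    left, right = interpret(node[1], env), interpret(node[2], env)
    return left + right if kind == "add" else left * right


def to_source(node):
    """Translate the tree into Python source text (done once, when hot)."""
    kind = node[0]
    if kind == "const":
        return repr(node[1])
    if kind == "var":
        return f"env[{node[1]!r}]"
    op = "+" if kind == "add" else "*"
    return f"({to_source(node[1])} {op} {to_source(node[2])})"


class TieredFunction:
    """Interprets a tree until it is hot, then switches to compiled code."""

    def __init__(self, tree):
        self.tree = tree
        self.calls = 0
        self.compiled = None  # filled in once the tree becomes hot

    def __call__(self, env):
        if self.compiled is not None:
            return self.compiled(env)  # fast path: reuse the cached code
        self.calls += 1
        if self.calls >= HOT_THRESHOLD:
            source = f"lambda env: {to_source(self.tree)}"
            self.compiled = eval(compile(source, "<toy-jit>", "eval"))
        return interpret(self.tree, env)


# Represents x * 2 + 1
tree = ("add", ("mul", ("var", "x"), ("const", 2)), ("const", 1))
double_plus_one = TieredFunction(tree)
results = [double_plus_one({"x": n}) for n in range(5)]
print(results, double_plus_one.compiled is not None)
```

The first three calls walk the tree; from the fourth call onward the cached function is used, mirroring how a compiled method does not need to be compiled again.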

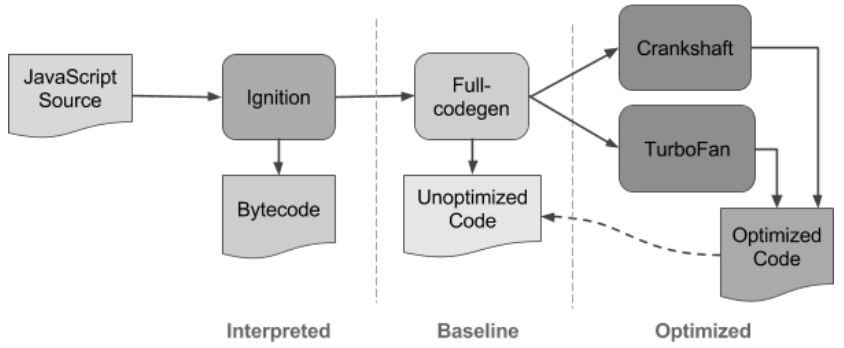

The JavaScript (JS) V8 engine, used by Chrome and server-side Node.js, uses both an interpreter and a JIT. V8 is designed this way because JavaScript is often executed in the browser, where quick first execution of code is paramount. Upon execution, the Ignition interpreter compiles the JS source to byte-code and begins running it immediately for quick startup. Meanwhile, the TurboFan JIT compiles frequently executed byte-code into optimized machine-code, which then replaces the interpreted code. This results in JS code that starts quickly and speeds up as it runs.

Though compilation varies between languages, in general it can be broken down into three phases. Phase one transforms the code into an abstract intermediate representation (eg. an abstract-syntax-tree) through tokenization and parsing, followed by semantic validation such as type-checking. Phase two applies optimizations, including removing unreachable code and optimizing control flow (eg. loops). Phase three generates either byte-code for a VM or machine-code for a specific CPU architecture.
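The three phases can be sketched as a tiny compiler for arithmetic expressions. This is a minimal illustration under several assumptions: the language only has integers, names, `+`, `*`, and parentheses; semantic validation and type-checking are skipped; the only optimization is constant folding; and the target is a hypothetical stack machine with `PUSH`, `LOAD`, `ADD`, and `MUL` instructions.

```python
import re


def tokenize(source):
    """Phase 1a: split source text into number, name, and operator tokens."""
    return re.findall(r"\d+|[A-Za-z_]\w*|[+*()]", source)


def parse(tokens):
    """Phase 1b: build an AST of nested tuples; '*' binds tighter than '+'."""
    pos = 0

    def atom():
        nonlocal pos
        token = tokens[pos]
        pos += 1
        if token == "(":
            node = expr()
            pos += 1  # skip the closing ")"
            return node
        return ("num", int(token)) if token.isdigit() else ("var", token)

    def term():
        nonlocal pos
        node = atom()
        while pos < len(tokens) and tokens[pos] == "*":
            pos += 1
            node = ("*", node, atom())
        return node

    def expr():
        nonlocal pos
        node = term()
        while pos < len(tokens) and tokens[pos] == "+":
            pos += 1
            node = ("+", node, term())
        return node

    return expr()


def fold(node):
    """Phase 2: constant folding, eg. 2 + 3 becomes the single number 5."""
    if node[0] in ("num", "var"):
        return node
    op, left, right = node[0], fold(node[1]), fold(node[2])
    if left[0] == "num" and right[0] == "num":
        return ("num", left[1] + right[1] if op == "+" else left[1] * right[1])
    return (op, left, right)


def generate(node):
    """Phase 3: emit instructions for a hypothetical stack machine."""
    if node[0] == "num":
        return [("PUSH", node[1])]
    if node[0] == "var":
        return [("LOAD", node[1])]
    operation = ("ADD",) if node[0] == "+" else ("MUL",)
    return generate(node[1]) + generate(node[2]) + [operation]


def run(program, env):
    """A tiny VM that executes the generated instructions."""
    stack = []
    for instruction in program:
        if instruction[0] == "PUSH":
            stack.append(instruction[1])
        elif instruction[0] == "LOAD":
            stack.append(env[instruction[1]])
        else:
            right, left = stack.pop(), stack.pop()
            stack.append(left + right if instruction[0] == "ADD" else left * right)
    return stack.pop()


program = generate(fold(parse(tokenize("x * (2 + 3) + 4 * 5"))))
print(program)
print(run(program, {"x": 3}))
```

Thanks to constant folding, the generated program contains only five instructions: the sub-expressions `2 + 3` and `4 * 5` are computed at compile time rather than at run time.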

Binding, the process of connecting a call on an object or interface to a concrete implementation, can be done at build-time (eg. C functions, static methods in Java) or at run-time (eg. an overridden method in Java). With late binding, methods are not looked up until run-time, which makes it possible to examine object properties at run-time (type-introspection), alter object methods at run-time (reflection), and select the method implementation at run-time (dynamic-dispatch). Because it requires lookups at run-time, run-time binding tends to be slightly less performant than build-time binding while offering greater flexibility. Related to binding is dependency injection, the practice of inverting control of dependency resolution: rather than an object instantiating its own dependencies, a dependency-injector constructs the dependencies and injects them into the object. This separates the concerns of object-creation from object-use and simplifies testing, since dependencies can be swapped for test doubles. Dependency injection libraries may resolve dependencies at build-time (eg. Dagger for Java) or at run-time (eg. Spring or Guice for Java). Language support for dependency injection varies. Also related to binding are type-systems.
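The run-time binding features described above can be sketched in Python, where every method call is bound late. The `Shape`, `Square`, and `Rectangle` classes and the `describe` method are hypothetical and exist only for this example.

```python
class Shape:
    def area(self):
        raise NotImplementedError


class Square(Shape):
    def __init__(self, side):
        self.side = side

    def area(self):
        return self.side * self.side


class Rectangle(Shape):
    def __init__(self, width, height):
        self.width = width
        self.height = height

    def area(self):
        return self.width * self.height


shapes = [Square(2), Rectangle(2, 3)]

# Dynamic dispatch: which area() runs is decided per object at run-time.
areas = [shape.area() for shape in shapes]

# Introspection: examine an object's type and attributes at run-time.
has_area = hasattr(shapes[0], "area")
type_name = type(shapes[1]).__name__

# Reflection: add a method to an existing class while the program runs.
setattr(Shape, "describe", lambda self: f"{type(self).__name__} with area {self.area()}")
descriptions = [shape.describe() for shape in shapes]

print(areas, has_area, type_name, descriptions)
```

Objects created before `describe` was attached still gain the method, because the lookup happens on each call rather than at build-time.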

Type-systems

Type-systems are an important element of programming languages that formally define the interfaces between pieces of code in terms of data structures and data types. As applications grow and are worked on by many engineers and teams, a type-system becomes essential. Type-systems are deeply integrated into development tools, allowing contributors to quickly determine the data type and properties of each variable. Without types, understanding existing software often requires a time-consuming deep-dive through the code-base and documentation. Type-checking also validates interfaces and types, preventing a class of software bugs. Type-checking can happen statically at build-time (eg. C, Java, Kotlin, Go, Rust, TS) or dynamically at run-time (eg. Python, Ruby, PHP, JS). Static-typing with build-time type-checks offers improved performance, validation, and tooling, whereas dynamic-typing with run-time type-checks offers flexibility. Dynamically-typed languages commonly rely on “duck typing”, judging compatibility by whether an object has the methods and properties an operation needs rather than by an explicit type-declaration.
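A short Python sketch shows both the flexibility and the cost of dynamic, duck-typed checks. The `Duck`, `Robot`, and `Rock` classes and the `announce` function are hypothetical.

```python
class Duck:
    def speak(self):
        return "Quack"


class Robot:
    def speak(self):
        return "Beep"


class Rock:
    pass  # has no speak() method


def announce(thing):
    # No declared parameter type: any object with a speak() method works.
    return thing.speak().upper()


sounds = [announce(Duck()), announce(Robot())]

# The mismatch is only discovered when the offending line actually runs.
try:
    announce(Rock())
    error = None
except AttributeError as exc:
    error = type(exc).__name__

print(sounds, error)
```

`Duck` and `Robot` share no base class yet both work, while the `Rock` mistake surfaces only at run-time. In a statically-typed language the equivalent call would typically be rejected at build-time.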

Some type-systems allow for unions/intersections to combine types (eg. TypeScript), generics to allow for multi-type methods with type-safety (eg. Java, Kotlin), or traits/mix-ins to define shared behavior and method-composition across types (eg. Rust traits, Scala traits, Ruby modules, JS via Object.assign or Function.prototype.call/apply). Polymorphism, common across languages, allows different object-types that satisfy the same interface to be used interchangeably.
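These features can be sketched with Python's optional type hints. One caveat: Python does not enforce annotations at run-time, so the type-safety here only materializes when an external static checker is run over the code. `Stack`, `describe`, `JsonMixin`, and `User` are hypothetical names.

```python
import json
from typing import Generic, List, TypeVar, Union

T = TypeVar("T")


class Stack(Generic[T]):
    """Generic container: one implementation, usable with any element type."""

    def __init__(self) -> None:
        self._items: List[T] = []

    def push(self, item: T) -> None:
        self._items.append(item)

    def pop(self) -> T:
        return self._items.pop()


def describe(value: Union[int, str]) -> str:
    """Union type: the argument may be an int or a str."""
    if isinstance(value, int):
        return f"number {value}"
    return f"text {value}"


class JsonMixin:
    """Mix-in: shared behavior that unrelated classes can opt into."""

    def to_json(self) -> str:
        return json.dumps(vars(self), sort_keys=True)


class User(JsonMixin):
    def __init__(self, name: str, age: int) -> None:
        self.name = name
        self.age = age


numbers: Stack[int] = Stack()
numbers.push(1)
numbers.push(2)
top = numbers.pop()
user_json = User("Ada", 36).to_json()
print(top, describe(3), describe("x"), user_json)
```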

Statically-typed languages may use nominal typing (eg. C, Java, Rust) or structural typing (eg. Go interfaces, TS). Often, pure object-oriented languages will use nominal type-systems, whereas structural typing appears more often in functional languages (eg. OCaml objects and polymorphic variants); Haskell's data types, however, are nominal. Nominal type-systems evaluate type compatibility using explicit declarations, whereas structural type-systems evaluate type-compatibility using the object’s structure, sometimes called “static duck typing”. Nominal type-systems require explicit inheritance, specifying all class relationships, which can be more verbose. When modeling objects with several distinct behaviors, nominal type-systems can require a large number of classes with complex hierarchies of inheritance to represent all the possible behavior combinations (eg. a Java stream supporting 3 traits - buffered vs unbuffered, writable vs read-only, and seekable vs non-seekable), though generics help with this. Structural type-systems avoid this and provide easier extensibility, as subtypes don’t need to explicitly declare implementation of an interface; any type with structure conforming to the interface is compatible. Conversely, structural-typing can be less safe semantically (eg. two TS object types that each contain a single number but represent different units are treated as interchangeable).
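Python can approximate both approaches, which makes for a compact side-by-side sketch: an abstract base class behaves nominally, while `typing.Protocol` behaves structurally. The checks below happen at run-time through `isinstance`, whereas the languages discussed above perform them at build-time. All class names are hypothetical.

```python
from abc import ABC, abstractmethod
from typing import Protocol, runtime_checkable


class NominalReader(ABC):
    """Nominal interface: a class must explicitly inherit from it."""

    @abstractmethod
    def read(self) -> str: ...


@runtime_checkable
class StructuralReader(Protocol):
    """Structural interface: any class with a read() method conforms."""

    def read(self) -> str: ...


class FileReader(NominalReader):  # declares the relationship explicitly
    def read(self) -> str:
        return "file contents"


class SocketReader:  # declares no relationship to either interface
    def read(self) -> str:
        return "socket contents"


file_reader, socket_reader = FileReader(), SocketReader()

nominal = [
    isinstance(file_reader, NominalReader),
    isinstance(socket_reader, NominalReader),
]
structural = [
    isinstance(file_reader, StructuralReader),
    isinstance(socket_reader, StructuralReader),
]
print(nominal, structural)
```

`SocketReader` satisfies the structural interface purely because of its shape, but fails the nominal check since it never declared the relationship.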

Further, there is strong-typing (eg. Java, Kotlin, Go, Python, Ruby, TS) vs weak-typing (eg. JS), which refers to whether a language will throw an error for incompatible types or silently coerce them to complete an operation. Strong-typing is preferable to avoid data inconsistencies. Plain JavaScript with weak-typing is famous for odd type-coercions that cause data issues when coding best practices are not followed; TS addresses this in part by checking types statically at build-time.
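As a small illustration of strong typing, Python refuses to mix a string and an integer in an addition and requires an explicit conversion. In JavaScript, by contrast, `"1" + 1` evaluates to `"11"` and `"1" - 1` evaluates to `0`. The `add` helper below is hypothetical.

```python
def add(left, right):
    """Return left + right, or a short message if the types are incompatible."""
    try:
        return left + right
    except TypeError as exc:
        return f"TypeError: {exc}"


mixed = add("1", 1)           # incompatible types: no silent coercion
as_number = add(int("1"), 1)  # explicit conversion to int
as_text = add("1", str(1))    # explicit conversion to str

print(mixed)
print(as_number, as_text)
```

The programmer has to state which interpretation is intended, which is what prevents the silent data inconsistencies mentioned above.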

Now that we have covered compilers and type-systems at a high level, in part 2 we will cover memory management and concurrency. Part 3 will conclude with how the 8 widely-adopted programming languages compare.

Image references:

- Orlov, Michael. 2017. Evolving software building blocks

- McIlroy, Ross. 2016. Firing up the Ignition Interpreter