This three-part series compares 8 widely-adopted programming languages, categorized into 3 broad groups: compiled low-level, compiled high-level, and interpreted. Before diving into how the languages compare, we discuss the key functions and internals of programming languages and runtimes at large. Part 1 covered compilation and data types. Here, in part 2, we cover memory management and concurrency. These are all key differentiators when comparing programming languages.

Memory Management

Programming languages all need a way to allocate, reference, and release blocks of memory provided by system hardware. This hardware is usually Random Access Memory (RAM), which allows quick reading and writing of short-lived data. Data may also be held in CPU caches or on disk. Most language runtimes use a similar stack- and heap-based structure. Stack memory holds smaller, temporary data that is private to a single thread and laid out in contiguous blocks of memory. Each method call gets its own stack frame, which is popped when the method returns. The heap, on the other hand, is shared across threads and is typically used for storing objects. Heap allocation is slower than stack allocation, but the heap offers much more storage capacity.

There are largely two ways languages go about managing memory: either the developer manually allocates and releases blocks of memory, or allocation and release are handled automatically by the compiler and a garbage collector. Garbage collectors are runtime systems that track references to data and reclaim its memory once it is no longer reachable, for example after every reference to it has gone out of scope. Many modern programming languages use garbage collection, and it is a key differentiator between low-level system-focused languages like C and Rust and high-level application-focused languages like Java, Python, Ruby, and JS/TS. Rust uses a borrow checker, a build-time alternative covered later in part 2. When manually allocated memory is not released, the result is a memory leak: the program holds onto memory it no longer uses. Over time, memory leaks can consume all available system memory and cause the application to fail.
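To make reference tracking concrete, here is a minimal sketch in Python. It assumes the CPython runtime, which reclaims an object as soon as its reference count reaches zero. Other runtimes may defer reclamation, which is why the sketch also calls `gc.collect()`. The `Buffer` class and the function name are hypothetical, chosen only for illustration.

```python
import gc
import weakref


class Buffer:
    """Hypothetical stand-in for a heap-allocated object."""

    def __init__(self, size: int) -> None:
        self.data = bytearray(size)


def reclaimed_after_last_reference() -> bool:
    buf = Buffer(1024)
    alias = buf               # second strong reference to the same object
    probe = weakref.ref(buf)  # weak reference: observes the object without keeping it alive

    del buf                   # one strong reference (alias) still remains
    still_alive = probe() is not None

    del alias                 # last strong reference dropped
    gc.collect()              # unnecessary under CPython refcounting; covers other runtimes
    return still_alive and probe() is None
```

The function returns `True` when the object survives while any strong reference exists and is reclaimed once the last one is gone.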

Concurrency

Concurrency and parallelism are related concepts that are frequently conflated. Both involve making progress on multiple tasks (series of operations) over the same period, but concurrency is the interleaving of operations from independent tasks without affecting the final results, whereas parallelism is the execution of multiple independent operations at the same instant. Programs run as a process with one or more underlying kernel-threads. A kernel-thread is the smallest unit of computation managed independently by an operating system scheduler. Multiple threads of a given process may be executed concurrently and share resources such as memory, while different processes do not share these resources by default.

Parallelism requires multiple processing units (e.g. CPU cores, GPU), but even a single processing unit can achieve high levels of concurrency when its tasks spend time waiting on operations like I/O. Parallelism may be achieved with any programming language given multiple processing units, so we will focus on how different languages handle concurrency, threading, and blocking vs non-blocking operations. Concurrency may be implemented with constructs at either the programming-language level (events, goroutines, coroutines) or the operating-system level (kernel-threads).
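As an illustration of concurrency without parallelism, the sketch below runs three simulated I/O waits on a single thread using Python's `asyncio`. The `fake_io` helper and its delays are assumptions made for the example. `asyncio.sleep` stands in for a real network or disk wait.

```python
import asyncio
import time


async def fake_io(name: str, delay: float) -> str:
    # asyncio.sleep yields control to the event loop, like a pending I/O call would
    await asyncio.sleep(delay)
    return name


async def run_concurrently() -> tuple[list[str], float]:
    start = time.perf_counter()
    # All three waits are interleaved on one thread by the event loop
    results = await asyncio.gather(
        fake_io("a", 0.2), fake_io("b", 0.2), fake_io("c", 0.2)
    )
    return results, time.perf_counter() - start
```

Calling `asyncio.run(run_concurrently())` should take roughly as long as the longest single wait, rather than the sum of all three, because the waits overlap even though only one thread is executing.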

Operations may be blocking or non-blocking. Blocking operations are executed synchronously and do not return until the operation completes, whereas non-blocking operations are typically executed asynchronously, returning immediately and providing some mechanism to retrieve the result later. Blocking operations can be simpler and easier to reason about than non-blocking async operations.
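The difference can be sketched with Python's standard `concurrent.futures` module. The `slow_square` function is a hypothetical stand-in for any slow operation, and a thread pool is only one of several ways to get a non-blocking call.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def slow_square(x: int) -> int:
    time.sleep(0.1)  # stand-in for a slow operation
    return x * x


def blocking_call() -> int:
    # Blocking: the caller waits here until the result is ready
    return slow_square(4)


def non_blocking_call() -> tuple[bool, int]:
    with ThreadPoolExecutor(max_workers=1) as pool:
        future = pool.submit(slow_square, 4)  # returns a Future right away
        done_right_away = future.done()       # work is usually still in flight here
        result = future.result()              # the caller decides when to wait
    return done_right_away, result
```

Both calls produce the same value. The non-blocking version simply hands back a `Future` first, leaving the caller free to do other work before asking for the result.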

Managing the sequencing of operations across concurrent tasks, along with the communication and ownership of data between tasks, is complex. Bugs can manifest as race conditions that are not immediately reproducible and are troublesome to debug; behavior can be non-deterministic. The implementation of concurrency is therefore essential to programming language design. Concurrency models are grouped into two classes: shared-memory or message-passing. The languages we cover approach concurrency very differently and demonstrate different performance profiles. Some concurrency models are better suited to I/O-heavy operations (e.g. HTTP requests, disk reads), others to compute-intensive operations (e.g. neural networks, image processing, cryptography).

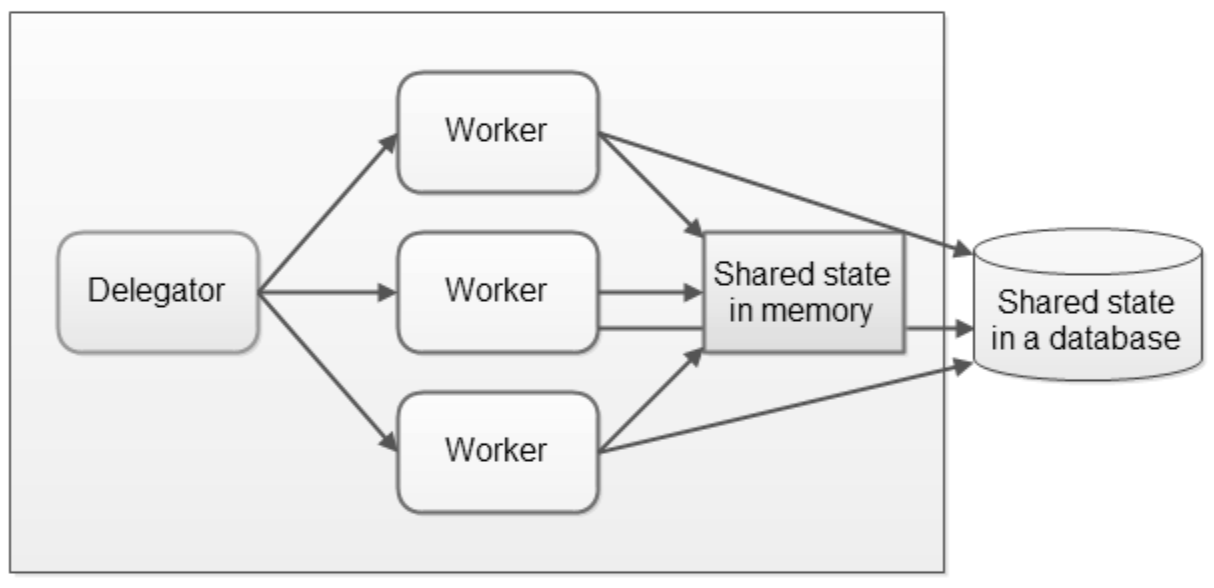

In the shared-memory class of concurrency, concurrent tasks communicate through shared state and require some form of locking or synchronization for coordination. Immutable, versioned data structures and compare-and-set operations may also be used. Software transactional memory (STM) uses language constructs to mark sections of code as atomic, optimistically executes the code, and then rolls it back if a conflict is detected. STM only works with shared memory, not with external resources, and requires transactional operations to be revocable, with no side effects that cannot be rolled back. Kernel-threads and thread-pools are often used explicitly in these languages to support concurrent execution. For example, Java exposes threads explicitly within the programming language and, under the hood, maps its traditional platform threads 1-to-1 to kernel-threads.

Synchronization with locks, implicit or explicit, allows only one thread at a time to enter a critical section of code and commit data, while other threads wait for it to finish. When many threads are waiting on the same lock, this is called thread contention. Critical sections are typically kept as small as possible to maximize the number of operations that can execute concurrently and to minimize thread contention. When not implemented properly, shared state can result in deadlocks, where two threads each wait on the other and neither can continue. Locks that are too coarse reduce performance, whereas locks that are too fine-grained increase the danger of deadlocks. Threads and shared memory often incur higher context-switching and memory overhead. Typically shared-memory concurrency relies on blocking operations, but non-blocking operations may also be available to decrease contention and increase performance.
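A minimal sketch of a lock guarding a critical section, using Python's `threading` module. The function name, thread count, and increment count are illustrative assumptions. The critical section is deliberately kept to a single statement.

```python
import threading


def count_with_lock(num_threads: int = 4, increments: int = 10_000) -> int:
    counter = 0
    lock = threading.Lock()

    def worker() -> None:
        nonlocal counter
        for _ in range(increments):
            with lock:        # only one thread at a time may run this block
                counter += 1  # read-modify-write on shared state

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter
```

With the lock in place, the final count always equals `num_threads * increments`. Without it, the read-modify-write on `counter` is not guaranteed to be atomic, so concurrent updates could be lost.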

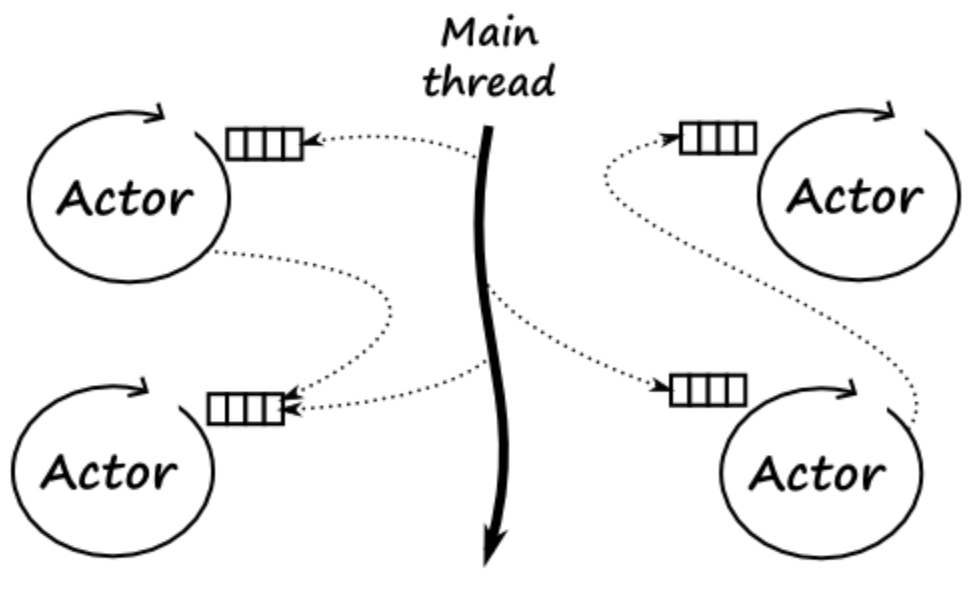

Message-passing based concurrency, sometimes called reactive programming, avoids shared memory and relies on communication between tasks with independent memory. Many concurrent tasks may map to a single kernel-thread, with the language abstracting away its use of kernel-threads under the hood. The requestor and the receiver are decoupled, relying on messages or events to communicate. Event-driven approaches (e.g. JS/TS) use a loop to continually process events off a queue as operations complete. When a producer begins an async operation, the operation is registered, and once it resolves, its result is placed onto the queue to be evaluated. Message-driven approaches, rather than registering the producer or event, send messages to specific recipients, actors, or queues; this can be done synchronously or asynchronously. Synchronous message-passing blocks a task until another task receives the message, whereas asynchronous message-passing continues execution as soon as the task sends the message, often to a queue. Communicating Sequential Processes (CSP) and the Actor model are two forms of message-passing. CSP (e.g. Go) is synchronous and focused on the channels messages are sent over, whereas the Actor model (e.g. Scala, Rust) is asynchronous, leveraging queues, and focuses on the actors/entities sending and receiving the messages.
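Asynchronous, queue-based message-passing can be sketched in Python with `queue.Queue` acting as a mailbox. This is only an approximation of the Actor model: Python threads technically share memory, so the isolation here is by convention, with all communication going through the queues. The `doubling_actor` and `send_and_collect` names and the `STOP` sentinel are hypothetical.

```python
import queue
import threading

STOP = object()  # sentinel message telling the worker to shut down


def doubling_actor(inbox: queue.Queue, outbox: queue.Queue) -> None:
    while True:
        message = inbox.get()  # blocks until a message arrives in the mailbox
        if message is STOP:
            break
        outbox.put(message * 2)


def send_and_collect(values: list[int]) -> list[int]:
    inbox: queue.Queue = queue.Queue()
    outbox: queue.Queue = queue.Queue()
    worker = threading.Thread(target=doubling_actor, args=(inbox, outbox))
    worker.start()
    for v in values:
        inbox.put(v)  # asynchronous send: returns without waiting for the receiver
    inbox.put(STOP)
    worker.join()
    return [outbox.get() for _ in values]
```

The sender never touches the worker's state directly. It only enqueues messages and later reads replies, which is the decoupling described above.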

Now that we have covered compilers, type systems, memory management, and concurrency at a high level, part 3 will look at how 8 widely-adopted programming languages compare.