The sharing economy has introduced a new wave of online marketplace apps and business models, where consumers and sellers are matched across a unique differentiated set of product inventory. This contrasts with traditional e-commerce platforms, where inventory is standardized and sellers are few. Online multi-sided marketplaces range from Uber and Lyft in gig-economy transportation of people with unique routes to DoorDash and Postmates in restaurant delivery with unique orders, Instacart and GoPuff in grocery delivery with unique pick-up and drop-off locations, TaskRabbit and Amazon Mechanical Turk with unique ad-hoc jobs, Turo and Boatsetter for individual car and boat rentals, Airbnb with unique one-of-a-kind accommodations, and Rover for dog care.

Each of these businesses has successfully created a marketplace that matches customers with a wide variety of sellers offering differentiated products or services, rather than standardized items. This makes the system more complex than traditional retail: it poses an optimization problem in which we must balance the needs of a diverse set of users across times and dates, locations, qualifications, features, amenities, availability, regional regulations, and so forth. Additionally, the rules and functioning of the marketplace need to be considered carefully to prevent gaming and ensure a fair and transparent marketplace for all parties.

In this 8-part e-book, we will design a multi-sided online marketplace encompassing multiple domains, subsystems, and applications, with principles that can be applied to a wide variety of marketplace and gig-economy use cases, from taxi services (e.g. Uber, Lyft) to restaurant delivery (e.g. DoorDash, Postmates) to third-party deliveries (e.g. Instacart, Shipt, Convoy). We’ll focus on a complex three-sided marketplace use case involving three distinct sets of users: customers making purchases, third-party vendors, and gig-economy drivers. By the end we will have designed a global transportation platform with a multi-sided marketplace where (i) consumers can shop through a variety of vendors (third-party merchants or taxi services) and (ii) have their goods or themselves delivered quickly, as quickly as same-hour, (iii) by gig-economy drivers, (iv) either scheduled ahead or on demand, (v) all without merchants or taxi companies managing any of the logistical complexity.

We must balance speed, low cost, and accurate ETAs for customers; consistent, highly available taxi and delivery services for third-party merchants and taxi companies; and fairness, transparency, and consistency for gig-economy drivers. The platform will support a wide range of transport speeds, starting as quick as same-hour, with low-latency APIs to optimize the checkout flow for maximum conversion. We will support multiple pick-up and drop-off locations, given that products and customers will be distributed. Finally, we will support multiple mechanisms for gig-economy drivers to obtain work, either scheduling work ahead to guarantee sufficient working hours or working on-demand with maximal flexibility, obtaining work in real time.

- Part 1: Introduction, Requirements, Mobile and Web Applications

- Part 2: Backend Infrastructure and Service Architecture

- Part 3: Vendor Management and 3rd Party Customer Checkout

- Part 4: Delivery Supply & Demand and Forecasting with Machine Learning

- Part 5: Delivery Route Planning, ETAs, and Dynamic Pricing with Machine Learning

- Part 6: Delivery Driver Preferences, Management, Standings, and Rewards

- Part 7: Push-based Route Targeting and Precomputed Eligibility

- Part 8: Delivery Route Marketplace with On-Demand and Schedule-Ahead Routes and Deferred Resolution Route Matching

Part 2: Backend Infrastructure and Service Architecture

Contents:

- Introduction

- WAF, Edge Router, Authentication, Authorization, Rate Limiting, and Public TLS

- Request Routing, DNS, Service Discovery, Service Meshes, and Private TLS

- Load Balancing

- Compute and Autoscaling

- On-Premise or Bare-Metal

- Virtual Machines

- Containers

- Functions / Lambdas

- Persistence, Caching, File Storage, Search, and Databases

- Caching

- File Storage and Content Delivery Networks (CDNs)

- Relational Databases

- Key-Value, Document, Column, Time-Series, Vector, and Graph Non-Relational Databases

- Search

- Infrastructure-as-Code

- Secrets Management

- Data Composition and GraphQL

- Real-time Notifications and Data

- Conclusion

Introduction

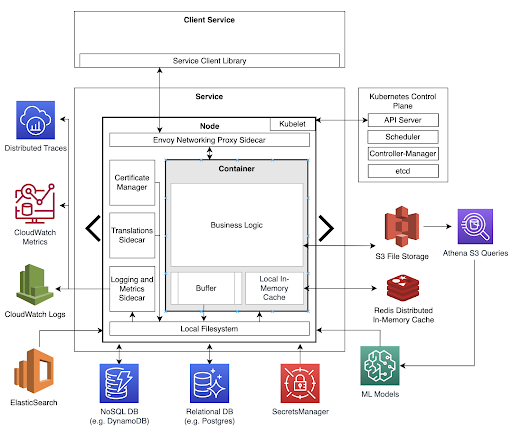

In Part 1, we explored the problem space, identified the multiple types of users, and outlined the solution we aim to design. We examined the user and technical requirements, along with the overall delivery flow of the platform. In this section, we’ll dive deep into the backend infrastructure and our service architecture. This includes key components such as authentication, authorization, service discovery, routing, compute options, databases, caching, secrets management, GraphQL, and real-time notifications. We will detail how and where these components will be utilized to securely track and process deliveries while ensuring scalability and high availability.

WAF, Edge Router, Authentication, Authorization, Rate Limiting, and Public TLS

Requests entering our platform first pass through a Web Application Firewall (WAF) to an edge router. The WAF protects backend domain services from distributed denial-of-service (DDoS) attacks and blocks malicious actors, including bots. It also guards against common vulnerabilities such as cross-site scripting (XSS) and SQL injection.

The edge router handles public TLS termination, external user rate limiting, authentication, and authorization. It includes modules for verifying user identities and determining roles and permissions. This information is encoded in a JSON Web Token (JWT) and sent with the request, enabling backend services to quickly identify the requestor and assess their access to APIs and data. Once the request clears the WAF and edge router, it is forwarded to the relevant backend domain service leveraging private TLS.
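To make the JWT hand-off concrete, here is a minimal sketch of how an edge router might sign a token and how a backend service might verify it. It assumes HS256 (HMAC-SHA256) with a shared secret; the text does not specify a signing algorithm, and the function names and claim fields (`sub`, `roles`, `exp`) are illustrative. A production system would normally use a vetted JWT library, often with asymmetric keys, and would also validate the `alg` header.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(data: bytes) -> str:
    """Base64url-encode without padding, as the JWT format requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    """Decode base64url, restoring the padding that JWTs strip."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(claims: dict, secret: bytes) -> str:
    """Edge router side (hypothetical helper): sign the claims with HS256."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


def verify_token(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Backend side (hypothetical helper): check signature and expiry, return claims."""
    header_b64, payload_b64, signature_b64 = token.split(".")
    expected = hmac.new(
        secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("invalid signature")
    claims = json.loads(_b64url_decode(payload_b64))
    current_time = time.time() if now is None else now
    if claims.get("exp", float("inf")) < current_time:
        raise ValueError("token expired")
    return claims
```

A backend service would call `verify_token` on each request and then check the returned roles against the API being invoked, without a round trip to the identity provider.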

The edge router can be implemented using cloud-hosted solutions such as AWS WAF combined with AWS API Gateway and a custom authorizer. Alternatively, it can be implemented as a custom service using a very low-latency, high-throughput service framework like Netflix’s open-source Zuul gateway (Zuul 2 is built atop Netty), hosted on AWS EC2 or other compute platforms.

Request Routing, DNS, Service Discovery, Service Meshes, and Private TLS

As a request enters the platform or is routed between microservices and subsystems, we need a mechanism to route the request to the correct service IP address. Several approaches are available for routing these network requests.

DNS-Based Routing

A common and default approach is to use the Domain Name System (DNS) to register each service. Each service is assigned a domain name, and when a request needs to be routed to it, the originating service performs a DNS lookup to retrieve one or more IP addresses. These usually correspond to the service’s load balancers (discussed more in the next section), with at least two for redundancy. The load balancer then distributes the request across the service’s internal nodes.

DNS can be public or private. Public DNS is used for public-facing websites via trusted third-party name servers, whereas private DNS is often employed within enterprise networks to reduce security exposure and costs. One challenge, especially with public DNS, is DNS caching. Changes to DNS records can take hours to propagate across the Web. While reducing DNS caching can mitigate this if implemented consistently throughout the network, it can increase latency due to more frequent DNS lookups.

Service Discovery with Service Meshes

Service meshes, such as Envoy, Istio, and HashiCorp Consul, offer an alternative to DNS for service discovery. Custom solutions built with Apache ZooKeeper or etcd (CNCF) may also be considered.

In this model, services register themselves directly with the service registry and utilize a network proxy sidecar. Upon startup, the sidecar registers with the service mesh, retrieves updated mappings, and—after passing liveness checks—becomes part of the registry. The service mesh manages traffic routing, client-side load balancing, and other advanced networking functions.
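The registration and liveness flow described above can be sketched as a toy in-memory registry. This is an illustration under stated assumptions, not how Envoy, Istio, or Consul are implemented: the class name, the heartbeat-with-TTL liveness model, and the injectable clock are all hypothetical.

```python
import time
from typing import Callable, Dict, List


class ServiceRegistry:
    """Toy registry: an instance stays listed while its heartbeats keep arriving."""

    def __init__(self, ttl_seconds: float = 10.0,
                 clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        # service name -> instance address -> time of last heartbeat
        self._last_seen: Dict[str, Dict[str, float]] = {}

    def heartbeat(self, service: str, address: str) -> None:
        """Called by a sidecar at startup and then periodically as a liveness signal."""
        self._last_seen.setdefault(service, {})[address] = self._clock()

    def lookup(self, service: str) -> List[str]:
        """Return the addresses whose last heartbeat falls within the TTL."""
        cutoff = self._clock() - self._ttl
        instances = self._last_seen.get(service, {})
        return sorted(addr for addr, seen in instances.items() if seen >= cutoff)
```

Instances that stop sending heartbeats simply age out of `lookup` results, so callers stop routing to them without any explicit deregistration step.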

Centralized vs. Decentralized Service Discovery

The service discovery registry can be designed in a centralized or decentralized pattern. A centralized design is implemented as an internal service accessible to all services, whereas a decentralized design distributes the service mappings across client nodes, with each service independently advertising its availability using consensus protocols, minimizing single points of failure.

Routing in Service Meshes

With service meshes, individual service nodes will typically each add their own IP address to the service’s registry mapping, relying on client services to load balance their requests across the pool of IP address servers using a networking proxy. Alternatively, services could use their own internal load balancers and register those, similar to the DNS example above, if desired.

Advantages of Service Mesh

Service Meshes provide several powerful features beyond service discovery and client-side load balancing:

- Shadow Traffic Replication: Enables safe testing of changes with replicated traffic.

- Weighted Routing: Facilitates incremental blue/green or canary deployments.

- TLS Management: Simplifies certificate management, resumption, and termination.

- Protocol Support: Seamlessly supports protocols like gRPC alongside HTTP+REST.

- Circuit Breaking: Improves resiliency by preventing overloads.

- Rate Limiting: Controls the flow of traffic to services.

- Request Monitoring and Tracing: Enhances debugging and performance insights.
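As an illustration of weighted routing from the list above, the sketch below sends a configurable share of requests to a canary version. The version names and weights are hypothetical, and a real mesh applies this logic inside the proxy rather than in application code.

```python
import random
from typing import Dict, Optional


def pick_version(weights: Dict[str, int], rng: Optional[random.Random] = None) -> str:
    """Pick a deployment version with probability proportional to its weight."""
    rng = rng or random.Random()
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Example: route roughly 5% of traffic to the canary, the rest to stable.
canary_weights = {"stable": 95, "canary": 5}
```

Shifting the weights step by step (for example 5, 25, 50, 100) gives an incremental rollout, and setting the canary weight back to 0 acts as a rollback.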

Our Approach: Envoy

We will use Envoy for service discovery and request routing. Envoy enables us to safely roll out changes using shadow traffic replication, debug issues with distributed tracing, and efficiently manage load balancing.

Another critical function Envoy will handle is TLS resumption and termination for inter-service communication. While public TLS – using publicly verifiable TLS certificates – is terminated at the edge router for external requests, internal service communication within our network will utilize private TLS. This setup uses certificates issued and signed by our own internal certificate authority (CA). Envoy will encrypt outgoing requests and decrypt incoming requests, ensuring secure communication between services.

Load Balancing

When requests are routed to a service, we need a mechanism to distribute them across the servers, VMs, or containers hosting that service. Load balancing is typically performed on the receiving end (server-side), but load balancing from the requesting end (client-side) is also an option and is the usual configuration when using service meshes.

Server-Side Load Balancing

Server-side load balancing places a dedicated load balancer in front of the service. It receives incoming requests and routes each one, typically in a round-robin fashion, to one of the nodes in the service fleet.

We will use server-side load balancing for the edge router, where external requests first enter. For this purpose, we can leverage the AWS Network Load Balancer or host our own load balancers on AWS EC2 machines using Zuul or nginx. This approach provides a robust, secure, centralized way to manage incoming external traffic.

Client-Side Load Balancing

For routing requests between services, we will use client-side load balancing enabled by our Envoy sidecars, which handle both service discovery and network proxy functions. In client-side load balancing the client asynchronously receives and caches a list of IP addresses for a service. It then rotates through the list for subsequent requests to that service, distributing the load across the available nodes.

While client-side load balancing can occasionally result in some nodes being selected more frequently and becoming “hot” – especially when new clients spin up – it tends to balance out as traffic and scale increase. This approach is lightweight and integrates seamlessly with our Envoy-based design, providing efficient distribution of requests without relying on a plethora of load balancers.
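The rotation described above can be sketched in a few lines. This is a simplified stand-in for what a sidecar proxy does, with hypothetical class and method names; real proxies also account for health checks, weights, and connection reuse.

```python
import itertools
import threading
from typing import Iterable, List


class RoundRobinBalancer:
    """Rotates through a cached list of addresses for one target service."""

    def __init__(self, addresses: Iterable[str]):
        self._lock = threading.Lock()
        self._addresses: List[str] = list(addresses)
        self._counter = itertools.count()

    def update(self, addresses: Iterable[str]) -> None:
        """Replace the cached list when service discovery pushes a new one."""
        with self._lock:
            self._addresses = list(addresses)

    def next_address(self) -> str:
        """Return the next address in rotation."""
        with self._lock:
            if not self._addresses:
                raise LookupError("no addresses known for this service")
            return self._addresses[next(self._counter) % len(self._addresses)]
```

Because every client keeps its own counter, a freshly started client always begins at the first address, which is one way the temporary "hot" nodes mentioned above can arise.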

Compute and Autoscaling

Each service in our platform will be self-contained, adhering to domain-driven design (DDD) principles and implemented using a microservice architecture on Amazon Web Services (AWS). Microsoft Azure and Google Cloud Platform (GCP) are good options too.

For the compute layer, we have a range of options spanning from barebones options with full customization and more operational overhead to highly abstracted options with quick and easy setup. We will evaluate six compute options, listed from the lowest to highest level of abstraction:

- Self-hosted: On-premises infrastructure for complete control.

- AWS Bare-Metal Servers: Physical servers with no virtualization for specialized workloads.

- AWS EC2 (VMs): Virtual machines for flexible and scalable compute resources.

- AWS ECS/EKS: Managed container orchestration using Docker (ECS) or Kubernetes (EKS).

- AWS Fargate (CaaS): Containers-as-a-Service for managing containerized applications without handling infrastructure.

- AWS Lambda (FaaS): Function-as-a-Service for event-driven compute with serverless architecture.

In the following sections, we will dive deeper into these compute options, examining their features, trade-offs, and use cases to determine the best fit for each service in our architecture.

On-Premise or Bare-Metal

The most barebones option is running our own server hardware in-office, followed by the option to use AWS’s bare-metal dedicated hardware offering. These require teams to maintain the servers, with less scalability, and potentially more cost depending on the scale of traffic versus the fixed provisioned capacity. Costs can sometimes become preferable at very large scale though. AWS’s Bare-Metal instances are not virtualized; in other words, they do not run under a hypervisor like normal EC2 instances do. These instances are optimal for highly specialized workloads where additional hardware access is required. Our use case has no need for specialized hardware access, and we would rather avoid the additional operational overhead and maintenance.

Virtual Machines

Next, we consider virtual machines (VMs) in EC2. This was AWS’ first compute offering and is a solid option for us to consider. We will have to manage the operating system within the VM, the binaries, and OS updates. This is the first option where we can leverage autoscaling, automatically spinning up additional VMs in the cloud when traffic increases and scaling them down when traffic decreases. VMs offer significant flexibility, but autoscaling is relatively slow (it can take more than 5 minutes to spin up additional VM instances) and operational overhead is higher than with the next few options.
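One common way to decide how many instances to run is a proportional, target-tracking rule: scale the fleet by the ratio of the observed metric to its target. The sketch below assumes average CPU utilization as the metric, and the function name and bounds are hypothetical. The Kubernetes Horizontal Pod Autoscaler documents a rule of the same shape.

```python
import math


def desired_instances(current: int, observed_metric: float, target_metric: float,
                      min_instances: int = 1, max_instances: int = 100) -> int:
    """Proportional rule: desired = ceil(current * observed / target), then clamp."""
    if current < 1 or target_metric <= 0:
        raise ValueError("current must be >= 1 and target_metric must be > 0")
    desired = math.ceil(current * observed_metric / target_metric)
    return max(min_instances, min(max_instances, desired))
```

For example, 4 instances averaging 90% CPU against a 60% target gives `desired_instances(4, 90, 60)`, which evaluates to 6. The slower the compute option is to start, the more headroom the target needs to leave.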

Containers

The next two options leverage containers, which are one level of abstraction higher than a VM. Containers are a great middle-ground option for a wide range of use cases and are what we typically default to using. Containers provide the flexibility to supply your own binaries and container images, while being significantly lighter weight than a virtual machine, with lower memory usage and spin-up times. This means developers can spin up tens of containers running multiple services on their local machine for integration testing of distributed changes, and autoscaling the service can occur much more rapidly. With containers, a service will typically scale horizontally across many smaller compute nodes, which requires a container management technology to add nodes to the service fleet and monitor their health. The underlying servers and VMs that the containers run on when deployed also need to be managed. The first option is to manage the VMs ourselves, using AWS Elastic Container Service (ECS) atop EC2 or the very popular Kubernetes (K8s) container orchestration technology, either self-managed atop EC2 or via AWS EKS.

Kubernetes will be best for larger organizations with hundreds of services who have infrastructure teams to manage the compute and orchestration, along with large scale where cost optimization is a high priority and economies of scale become a factor.

“Serverless” compute options indeed rely on servers, but they aren’t servers you as an engineering team manage; rather they are abstracted away and managed by the cloud provider. This enables engineering teams to focus on their business logic and unique product offerings.

Container-as-a-service (CaaS) platforms like AWS Fargate offer a container as the compute abstraction, enabling engineering teams to focus only on the container internals and not the underlying servers or VMs. This is a fantastic option for smaller organizations without infrastructure teams, or for teams that want to be fully independent and decoupled, where rapid development, low upfront investment, and team independence are top priorities. The final level of compute abstraction is functions.

Functions / Lambdas

Functions-as-a-service (FaaS) like AWS Lambda offer the simplest and most abstracted compute option. They are the quickest to get started and fully abstract away all infrastructure from the engineering team, letting them focus on the business logic code exclusively. With Lambda, the billing model changes from compute hours to cost per invocation. This makes Lambdas very cost-efficient for low-scale services or ones with highly intermittent traffic, but as scale increases or traffic becomes more consistent they can become quite costly.
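The trade-off between per-invocation and per-hour billing can be made concrete with a small break-even calculation. All prices below are hypothetical placeholders rather than actual AWS pricing, and the function names are illustrative. The FaaS model assumes a per-request fee plus a fee for memory-seconds consumed.

```python
def faas_monthly_cost(requests: int, avg_duration_s: float, memory_gb: float,
                      price_per_million_requests: float,
                      price_per_gb_second: float) -> float:
    """Pay-per-use: a per-request fee plus a fee for memory-seconds consumed."""
    request_fee = requests / 1_000_000 * price_per_million_requests
    compute_fee = requests * avg_duration_s * memory_gb * price_per_gb_second
    return request_fee + compute_fee


def container_monthly_cost(instances: int, price_per_instance_hour: float,
                           hours_per_month: float = 730.0) -> float:
    """Provisioned capacity: billed for every hour, whether busy or idle."""
    return instances * price_per_instance_hour * hours_per_month


def break_even_requests(container_cost: float, avg_duration_s: float,
                        memory_gb: float, price_per_million_requests: float,
                        price_per_gb_second: float) -> float:
    """Monthly request volume at which the FaaS bill equals the container bill."""
    cost_per_request = (price_per_million_requests / 1_000_000
                        + avg_duration_s * memory_gb * price_per_gb_second)
    return container_cost / cost_per_request
```

With the placeholder prices of 0.20 per million requests and 0.00002 per GB-second, 100 ms invocations at 0.5 GB, and two always-on containers at 0.05 per hour, the break-even lands at roughly 61 million requests per month. Below that volume the per-invocation model is cheaper, and above it the provisioned containers win.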

Lambdas have less flexibility than the less abstracted compute options in areas like in-memory caching, start-up scripts, or even which programming languages they support. AWS Lambda invocations are capped at 15 minutes, and idle instances are shut down after a period of inactivity; the next invocation then incurs a “cold start”, which requires a new Lambda instance to be spun up, start-up scripts rerun, and caches repopulated, though there are workarounds for this, like keep-alive Lambda calls. Lambdas can also only scale up so fast, as you must rely on the cloud provider to spin up new instances. Therefore, if traffic is especially spiky, such as with first-come-first-served exclusive items, Lambdas may have challenges scaling out fast enough.

| | On-Prem (Self-Hosted) | AWS Bare Metal | AWS EC2 (VM) | AWS EKS (K8s) | AWS Fargate (CaaS) | AWS Lambda (FaaS) |
|---|---|---|---|---|---|---|
| Simplicity | Complex | ⇒ | ⇒ | ⇒ | ⇒ | Simple |
| Billing Type | Hardware | Server | Server | Server | Container | Invocation |
| Business Logic | custom | custom | custom | custom | custom | custom |
| Runtime | custom | custom | custom | custom | custom | managed |
| OS | custom | custom | custom | custom | managed | managed |
| Orchestration & Scaling | custom | custom | custom | mix | managed | managed |
| Virtualization | custom | custom | managed | managed | managed | managed |
| Physical | custom | managed | managed | managed | managed | managed |

We will leverage Kubernetes on EKS due to the scale we will operate at and the balance of flexibility, cost, and functionality it provides. Our scale is large enough and our traffic consistent enough that Lambdas would not be cost-effective, we prefer lower operational overhead than on-prem or bare metal, and we prefer the lighter weight and faster scaling that containers provide over plain VMs.

Persistence, Caching, File Storage, Search, and Databases

Each microservice requires a method for storing and retrieving data. The choice of data persistence depends on factors such as data volume, access patterns, rate of change, latency constraints, and consistency/availability/partitioning requirements. There are five classes of persistence we will leverage:

- In-Memory Caches

- File Storage Systems

- Search Indexes

- Relational Databases

- Non-Relational / NoSQL / Graph Databases

Caching

Caching is a critical mechanism for improving performance and reducing load on primary data stores. It is typically implemented in multiple layers, with the fastest cache layers checked first, followed by progressively slower ones. In-memory caches, the fastest option, can be centralized or distributed across hosts, depending on the use case.

Cached data may be accessed, updated, or evicted at various points depending on application requirements:

- Access Pattern: Caches can be accessed before, after, or concurrently with the primary data store.

- Update Strategy: Cached data may be updated only for a single server instance or propagated across a service cluster.

- Eviction Policies: Data may be evicted based on memory constraints or recency of access, using configurable policies like Least Recently Used (LRU) or time-to-live (TTL).

Types of Caches

We will consider four classes of in-memory caches, each suited to different needs:

- Local In-Memory Cache (e.g. Google Guava): Simple; data is updated only from that server instance, with no propagation to other server nodes.

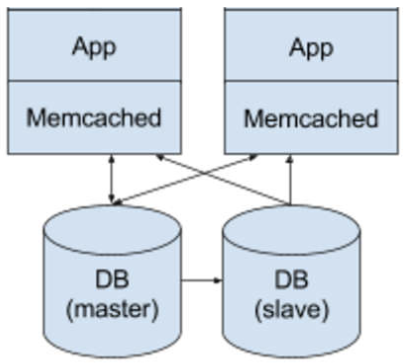

- Distributed On-Host In-Memory Cache (e.g. Memcached default): Data is updated in a centralized cache cluster and asynchronously propagated to other server nodes.

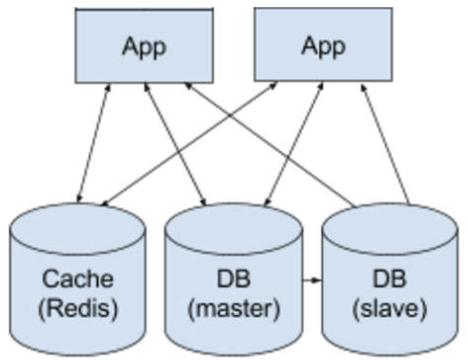

- Distributed Off-Host In-Memory Cache (e.g. Redis default): Data is updated in a centralized cluster and accessed directly by services rather than propagating to individual nodes.

- Database-Integrated Caching (e.g. DAX - DynamoDB Accelerator): Combines caching with the database layer for seamless access. Cached data is updated and stored alongside the database, providing a transparent performance boost for database calls.

Cache Update Strategies

Caches can be accessed and updated using different patterns, each with its own trade-offs. There are three cache update patterns we’ll consider:

- Write-through: Data is updated in the cache and in the primary persistence store concurrently. The operation returns successfully once writes to both are completed. This strategy’s advantage is in data consistency and fast retrieval of subsequent reads, whereas its disadvantage is in additional latency during writes. This is a common pattern for use-cases where data is written and then subsequently read quickly.

- Write-back: Data is updated in the cache only before the operation is successful; asynchronously in the background the primary persistence store is updated. This strategy’s advantage is in fast writes, whereas its disadvantage is in (a) the eventual consistency of requests made to other service instances while the primary persistence store has not yet been updated and (b) data loss if the cache is wiped prior to the primary persistence store being updated.

- Write-around: Data is updated in the primary persistence store only before the operation is successful. This strategy’s advantage is in reducing writes to the cache which may never be accessed, whereas its disadvantage is that the data needs to be retrieved from the slower primary persistence store upon it being requested or else outdated data will be read.
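The three update patterns above can be compared side by side in a small sketch. Plain dictionaries stand in for the cache and the primary persistence store, and the class and method names are hypothetical. Invalidating the cached entry on write-around is an assumption added here to avoid serving stale data.

```python
from typing import Dict, List, Tuple


class CachedStore:
    """Toy model: `cache` is the fast layer, `store` is the primary persistence store."""

    def __init__(self) -> None:
        self.cache: Dict[str, str] = {}
        self.store: Dict[str, str] = {}
        self._pending: List[Tuple[str, str]] = []  # write-back queue

    def write_through(self, key: str, value: str) -> None:
        """Succeeds only after both the cache and the store are updated."""
        self.cache[key] = value
        self.store[key] = value

    def write_back(self, key: str, value: str) -> None:
        """Succeeds after the cache update; the store is updated later by flush()."""
        self.cache[key] = value
        self._pending.append((key, value))

    def flush(self) -> None:
        """Background step that drains queued write-back updates into the store."""
        while self._pending:
            key, value = self._pending.pop(0)
            self.store[key] = value

    def write_around(self, key: str, value: str) -> None:
        """Updates the store only; drops any cached copy so it cannot go stale."""
        self.store[key] = value
        self.cache.pop(key, None)

    def read(self, key: str) -> str:
        """Read from the cache first, falling back to the store and caching the result."""
        if key in self.cache:
            return self.cache[key]
        value = self.store[key]
        self.cache[key] = value
        return value
```

Between a `write_back` call and the next `flush`, the store still holds the old value. That window is exactly where the eventual-consistency and data-loss risks described above come from.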

Cache Eviction Policies

To manage cache memory efficiently, eviction policies determine which data is removed when space is needed. Common policies include:

- First-in-first-out (FIFO): Evicts the oldest item.

- Last-in-first-out (LIFO): Evicts the newest item.

- Least-recently-used (LRU): Evicts the item accessed longest ago.

- Least-frequently-used (LFU): Evicts the item accessed least frequently.

We’ll implement a two-layer caching setup with on-host local caches (e.g. Google Guava) as the first caching layer and a distributed off-host in-memory cache cluster (e.g. Redis) as the second caching layer, along with an LRU cache eviction policy.
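A minimal sketch of this two-layer read path follows, assuming a hypothetical `load_from_store` callback for the primary data store. A second in-process LRU stands in for the off-host cluster, whereas in practice that layer would be a network call to something like Redis.

```python
from collections import OrderedDict
from typing import Callable, Optional


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int):
        self._capacity = capacity
        self._items: "OrderedDict[str, str]" = OrderedDict()

    def get(self, key: str) -> Optional[str]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: str, value: str) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # evict the least recently used


class TwoLayerCache:
    """Checks the local layer, then the remote layer, then the primary store."""

    def __init__(self, local: LRUCache, remote: LRUCache,
                 load_from_store: Callable[[str], str]):
        self.local = local
        self.remote = remote
        self._load = load_from_store

    def get(self, key: str) -> str:
        value = self.local.get(key)
        if value is not None:
            return value
        value = self.remote.get(key)
        if value is None:
            value = self._load(key)       # slowest path: primary data store
            self.remote.put(key, value)
        self.local.put(key, value)        # populate the faster layer on the way back
        return value
```

Note that this sketch uses `None` to signal a miss, so it cannot cache `None` values. A real implementation would use a distinct sentinel and would usually add TTLs to bound staleness in the local layer.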

File Storage and Content Delivery Networks (CDNs)

File Storage is ideal for managing large datasets that are accessed in bulk. External file storage, for example in AWS S3, is well suited for data which is loaded in batch on service start-up. File storage is regularly used in areas such as translations, Machine Learning model outputs, and large precomputed datasets. External file storage is optimal for storing assets like images, videos, and documents too.

For files accessed directly by end users, optimizing latency is crucial to enhance the user experience. Content Delivery Networks (CDNs) offer a global network of Points of Presence (PoPs) to cache files closer to users, significantly reducing latency for asset retrieval. This is especially beneficial for static content like images or videos. For backend or non-customer-facing files, however, using AWS S3 is more cost-effective and avoids potential hotspots caused by CDN PoPs being accessed by service servers colocated within the same region.

Search

Search indexes are specialized data stores designed for high-speed keyword lookups using inverted indexes (also called reverse indexes). Words are preprocessed to normalize variations, such as tenses, plurality, or synonyms, and then indexed alongside references to the documents that contain them and their positions within those documents. This results in very fast reads optimized for the search query use case, whereas writes are slow and typically done asynchronously. The indexes require large amounts of storage, making search indexes pricey. Search indexes therefore typically store only the minimally required data, in a preprocessed form, to minimize cost. The search index is typically updated and reindexed asynchronously as part of a nightly or weekly workflow.
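The indexing scheme described above can be illustrated with a tiny in-memory index that maps each normalized term to the documents and word positions where it occurs. The normalization here (lowercasing plus naive plural stripping) is a deliberately crude assumption. Real search engines use proper stemming, synonym handling, and relevance ranking.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def normalize(word: str) -> str:
    """Crude normalization: lowercase and strip a trailing plural 's'."""
    word = word.lower()
    return word[:-1] if len(word) > 3 and word.endswith("s") else word


def tokenize(text: str) -> List[str]:
    return [normalize(w) for w in re.findall(r"[A-Za-z0-9]+", text)]


class InvertedIndex:
    def __init__(self) -> None:
        # term -> document id -> positions of the term within that document
        self._postings: Dict[str, Dict[str, List[int]]] = defaultdict(dict)

    def add(self, doc_id: str, text: str) -> None:
        for position, term in enumerate(tokenize(text)):
            self._postings[term].setdefault(doc_id, []).append(position)

    def positions(self, term: str, doc_id: str) -> List[int]:
        return self._postings.get(normalize(term), {}).get(doc_id, [])

    def search(self, query: str) -> Set[str]:
        """Return the ids of documents containing every term in the query."""
        terms = tokenize(query)
        if not terms:
            return set()
        matches = [set(self._postings.get(term, {})) for term in terms]
        return set.intersection(*matches)
```

A lookup only touches the postings for the query terms, which is why reads are fast. Each `add`, by contrast, writes one entry per word, which is why indexing is comparatively slow and storage-heavy.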

Relational Databases

Relational databases are traditional databases leveraging rows and columns to store data in structured tables which may then be joined together to resolve SQL-based queries. Common relational databases include MySQL, MariaDB, and PostgreSQL. Relational databases are great for querying data which is relational in nature and/or requires strong ACID (Atomicity, Consistency, Isolation, Durability) guarantees. Common use cases include payment processing systems or structured datasets like relational shopping data.

While relational databases excel at complex queries and relationships, as scale grows, designing tables and queries for performance becomes increasingly critical. Relational databases can struggle with very large datasets containing billions of rows and heavy write loads or complex joins. Moreover, relational databases use strongly-typed schemas, ensuring consistency but often requiring data migrations when schema updates are needed.

Non-Relational Databases

Non-relational databases encompass a variety of database types, each suited for specific use cases. These include key-value stores, document databases, column-oriented databases, time-series databases, vector databases, and graph databases.

Key-Value and Document Databases

Key-value stores and document databases map a key to either a value or a structured document. Key-value stores treat the value as opaque, which can include simple strings, numbers, or complex objects like JSON. Document databases expand on this by structuring the value in a database-readable format, enabling enhanced indexing and queries.

These databases are excellent for use cases requiring fast reads of indexed data or handling high write volumes. However, they are less effective for relational queries involving multiple tables, as joins must be implemented within application logic. Additionally, they often lack strong ACID guarantees and typically use a proprietary query language instead of SQL.

Popular key-value and document databases include AWS DynamoDB, MongoDB, and CouchDB. DynamoDB, in particular, was quite groundbreaking when it launched, supporting very large datasets, high concurrent write volume, fast querying, and strong availability guarantees, all managed by AWS, eliminating operational costs typically associated with relational databases. DynamoDB has been successfully used across many of the largest software technology companies at massive scale. MongoDB and CouchDB have remained popular as well, offering flexible options for handling semi-structured data.

Column-oriented Databases

Column-oriented databases excel in analytical workloads, such as OLAP (Online Analytical Processing). Unlike traditional row-oriented databases, they store data in columns rather than rows, enabling efficient aggregation and querying of specific fields across large datasets. They don’t require all rows in a table to contain the same set of columns and can reduce disk storage requirements with improved compression.

Whereas traditional row-oriented databases can retrieve or update all data for an object in a single disk operation, column-oriented databases require multiple disk operations. This makes column-oriented databases less suited for transactional workloads reading and writing entire items at a time. Wide-column databases address some of these challenges by grouping related columns for optimized access.

Common use-cases for column-oriented databases include: aggregation queries, analytical processing (e.g. OLAP), and application performance monitoring (e.g. APM). Three common column-oriented databases include: Apache Cassandra, Amazon Redshift, and Google Bigtable.

Time-series Databases

Time-series databases (TSDB) are optimized for storing and querying data changing over time. This makes them ideal for metrics, application monitoring (e.g. APM), and supporting the internet-of-things. Data is first stored at the lowest time unit, for example at every 1 second interval, and depending on configuration at other interval granularities as well. Over time, to optimize on storage and query efficiency, time-series databases will automatically downsample/aggregate the data across time intervals into larger intervals (e.g. 1 hour) as a given event becomes older and older. This typically works well as data further away in time does not need the same level of time granularity for most use cases. For example, when storing a user metric we may first store it at a 1 second interval, then as time goes on, say in two months time, we may only need to query that metric at a 1-day interval - here the database will automatically downsample/aggregate the data into the larger interval. When a query comes in it is very fast as the data does not need to be aggregated in real-time. Time-series databases are one of the fastest growing classes of non-relational databases as of this writing and popular options include: InfluxDB, Prometheus, and AWS Timestream.
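The downsampling step described above can be sketched as follows, assuming integer Unix timestamps and averaging as the aggregation. The function name is hypothetical, and real time-series databases also support other aggregates such as min, max, or percentiles.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def downsample(points: Iterable[Tuple[int, float]],
               bucket_seconds: int) -> List[Tuple[int, float]]:
    """Average (timestamp, value) points into fixed-width time buckets."""
    buckets: Dict[int, List[float]] = defaultdict(list)
    for timestamp, value in points:
        bucket_start = timestamp - timestamp % bucket_seconds
        buckets[bucket_start].append(value)
    return [(start, mean(values)) for start, values in sorted(buckets.items())]
```

Running this once when data ages past a retention threshold, and storing the result, is what lets later queries over old data return quickly without aggregating raw points at read time.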

Vector Databases

Vector databases have become increasingly essential for Machine Learning (ML) and Artificial Intelligence (AI) use-cases to store vector embeddings. Vector embeddings are fixed-length arrays of numbers that encode the relationships between data points as positions in a high-dimensional space, an underpinning of Machine Learning and Deep Learning Neural Network models. Vector databases are optimized for large-scale vector data and can perform high-speed computations involving vectors, such as similarity (nearest-neighbor) search, in some cases leveraging GPUs for fast parallelized operations. Common vector database options include Pinecone, Milvus, and Qdrant.
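The core query a vector database answers is "which stored vectors are closest to this one?". The sketch below does this by brute force using cosine similarity over tiny made-up embeddings. It is only an illustration, since production systems typically rely on approximate nearest-neighbor indexes to avoid scanning every vector.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / norm


def nearest(query: Sequence[float], vectors: Dict[str, Sequence[float]],
            k: int = 1) -> List[Tuple[str, float]]:
    """Return the k stored vectors most similar to the query, best first."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in vectors.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]
```

In a marketplace setting, the stored vectors might be embeddings of menu items or listings, and the query an embedding of what the customer typed or recently viewed.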

Graph Databases

The final class of non-relational databases we’ll cover is graph databases. Graph databases are optimized for storing the relationships between highly connected entities within a graph. Rather than storing data in rows or columns, graph databases store data as a graph of nodes and edges between nodes, making them optimal for modeling data such as friends in a social network, route optimization, and pattern recognition like fraud detection. Three common graph databases include Neo4j, VertexDB, and Amazon Neptune. Also see Graph Theory.
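To show the kind of traversal a graph model makes natural, here is a breadth-first search for the shortest chain of connections between two nodes. The adjacency-list representation and the sample friendship graph are illustrative assumptions. A graph database would express the same query in its own query language and run it against stored nodes and edges.

```python
from collections import deque
from typing import Dict, List, Optional


def shortest_path(graph: Dict[str, List[str]], start: str,
                  goal: str) -> Optional[List[str]]:
    """Breadth-first search: returns the path with the fewest edges, or None."""
    previous: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path: List[str] = []
            current: Optional[str] = node
            while current is not None:  # walk back to the start
                path.append(current)
                current = previous[current]
            return path[::-1]
        for neighbor in graph.get(node, []):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


friends = {
    "ana": ["ben", "cy"],
    "ben": ["ana", "dee"],
    "cy": ["ana"],
    "dee": ["ben", "eli"],
    "eli": ["dee"],
}
```

Answering the same "how are these two people connected?" question in a relational database would take a self-join per hop, which is the access pattern graph databases are designed to avoid.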

Infrastructure-as-Code

We’ve discussed the compute and persistence layers of the microservices we are designing; next let’s focus on how we will manage this infrastructure.

Infrastructure-as-Code (IaC) is the practice of defining infrastructure through code, offering several significant advantages:

- Version control for infrastructure changes

- Replicability of environments with full parity

- Reproducibility for spinning up and tearing down environments

- Reduced human error through automation

- Faster provisioning compared to manual setup

AWS CloudFormation

One popular tool for managing infrastructure in AWS is AWS CloudFormation. CloudFormation uses declarative templates, written in JSON or YAML, to define and manage AWS infrastructure. While CloudFormation standardizes infrastructure management, it requires learning its template syntax and understanding the specific properties for each AWS service (e.g., EC2 vs. Fargate). Additionally, because templates are configuration rather than code, CloudFormation offers less of the tooling engineers expect from a general-purpose programming language, such as autocomplete, linting, and testing.

AWS CDK

An improvement over AWS CloudFormation is the AWS Cloud Development Kit (CDK). The CDK allows engineers to define infrastructure using general-purpose programming languages like Python and TypeScript, with programming tools like autocomplete, testing, and linting. The CDK code is then synthesized into CloudFormation templates, which are submitted to AWS for deployment. This approach enables faster development and better integration with common software development workflows.

Terraform and Third-Party Infrastructure Management Tools

There are also independent third-party tools that offer more flexibility by supporting multiple cloud providers and a variety of additional features:

- Terraform (HashiCorp)

- Ansible (Red Hat / IBM)

- Puppet

- Chef

Terraform is particularly powerful as it interacts directly with cloud provider APIs, rather than going through provider-specific templating languages like CloudFormation. This allows Terraform to support new cloud features before the official templating languages of cloud providers are updated. Additionally, Terraform stores a centralized state of the infrastructure, which allows for better tracking of changes and diffs, and makes it easier to revert to a previous configuration. This is especially useful in large teams where multiple engineers are working on the same infrastructure.
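The value of a stored state is easiest to see as a diff between what exists and what the code declares. The sketch below is a conceptual illustration only, not Terraform's actual algorithm or API: the `plan` function, the resource names, and their attributes are all hypothetical.

```python
def plan(current_state, desired_config):
    """Compare recorded state with desired configuration and list the changes."""
    changes = []
    for name, attrs in desired_config.items():
        if name not in current_state:
            changes.append(("create", name))
        elif current_state[name] != attrs:
            changes.append(("update", name))
    for name in current_state:
        if name not in desired_config:
            changes.append(("delete", name))
    return changes


# Hypothetical recorded state and desired configuration.
state = {"orders-db": {"size": "small"}, "legacy-queue": {"fifo": True}}
desired = {"orders-db": {"size": "large"}, "routes-cache": {"nodes": 3}}

print(plan(state, desired))
# [('update', 'orders-db'), ('create', 'routes-cache'), ('delete', 'legacy-queue')]
```

Because the diff is computed before anything is applied, a team can review exactly what will change, which is the workflow benefit the centralized state provides.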

We will use Terraform as we’re using Kubernetes and this keeps us flexible across cloud providers.

Secrets Management

Each microservice we design will require secure storage for highly sensitive service credentials such as cryptographic encryption keys, API keys, passphrases, and tokens. This data will be required during development on each engineer’s local machine; during integration on a remote server, potentially accessible by multiple teams and functions; and in production at full scale in a live environment.

Given the critical nature of these credentials and the grave impact of security breaches, we must have granular access control and auditing capabilities. At a very small scale with limited team members, secrets management could theoretically be handled with a rudimentary solution through manual configuration. However, this is error-prone, misses out on a variety of security controls, and will quickly become unsustainable as the organization scales. As server nodes, deployment environments, and engineers multiply, a dedicated secrets management tool becomes essential. Under no circumstances should sensitive data be stored in code repositories or raw on-disk formats.

Secrets management requirements:

- Environment-Specific Secrets: Separate secrets per environment (e.g. dev, staging, prod) and per service.

- Per-Engineer Local Secrets: During development, engineers will have unique local secrets injected into their environment through a CLI.

- Integration with CI/CD Pipelines: In cloud environments like staging and production, secrets will be securely injected into container environment variables through the CI/CD pipeline.

- Auditing and Granular Access Control: Every access to a secret must be logged, and permissions should follow the principle of least privilege.
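The requirements above can be sketched as a toy in-memory store that scopes secrets by environment and service, checks an explicit grant before every read, and logs every access attempt. This is an illustration of the requirements only; the class, method names, and principals are hypothetical and do not reflect the API of any real secrets manager, and a real system would also encrypt values at rest.

```python
from datetime import datetime, timezone


class SecretStore:
    """Toy secrets store: scoped secrets, explicit grants, full audit log."""

    def __init__(self):
        self._secrets = {}    # (environment, service, key) -> value
        self._grants = set()  # (principal, environment, service)
        self.audit_log = []   # one entry per read attempt, allowed or not

    def put(self, environment, service, key, value):
        self._secrets[(environment, service, key)] = value

    def grant(self, principal, environment, service):
        # Least privilege: access is granted per environment and service.
        self._grants.add((principal, environment, service))

    def read(self, principal, environment, service, key):
        allowed = (principal, environment, service) in self._grants
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "principal": principal,
            "secret": f"{environment}/{service}/{key}",
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"{principal} may not read {environment}/{service}")
        return self._secrets[(environment, service, key)]


store = SecretStore()
store.put("prod", "checkout", "api_key", "example-value")
store.grant("ci-pipeline", "prod", "checkout")

pipeline_value = store.read("ci-pipeline", "prod", "checkout", "api_key")

try:
    store.read("engineer-laptop", "prod", "checkout", "api_key")
    denied = False
except PermissionError:
    denied = True  # no grant for this principal in prod

print(pipeline_value, denied, len(store.audit_log))
```

Note that the denied attempt is still written to the audit log, since failed reads are often the most interesting entries during a security review.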

There are a number of robust secrets manager tools:

- HashiCorp Vault

- AWS Secrets Manager

- Microsoft Azure’s Key Vault

- Lyft’s Confidant

We will use HashiCorp Vault, aligning with our choice of Terraform and maintaining flexibility across cloud providers. Vault supports dynamic secrets, secret revocation, and comprehensive auditing. We will self-host Vault on containers running in AWS with backup options for disaster recovery.

Data Composition and GraphQL

With data distributed across multiple services, subsystems, and domains, composing all the data into a unified model designed for client applications presents a significant challenge. Frontend and mobile engineers must deeply understand each domain model and manually stitch data together, leading to:

- Duplication of Logic: Across web and mobile clients.

- Deployment Constraints: Mobile apps are restricted by app store guidelines and release cycles.

- Network Inefficiency: Additional network requests and larger data payloads degrade the user experience, increase latency, and drain mobile battery life.

Leveraging a data composition and orchestration layer between our edge router and individual domain services will reduce the duplication of logic across web and mobile clients, ensure release flexibility, and minimize network requests between the client and our services by keeping the data composition logic and serial API calls inside our network. It addresses two sources of network inefficiency in particular:

- Excessive Data Over-the-Wire: Backend services will often respond with much more information than the client requires, which reduces networking performance.

- Serial Network Calls: Nested data often requires serial calls across the network, which can be very slow and at times unreliable (e.g. fetching cart item IDs, then details for each).

For example, without a backend composition layer, rendering a shopping cart could require 20+ network calls from a mobile client in three serial batches: fetching the cart's product IDs, fetching color/style options for each product, and retrieving details and prices for each product color/style.
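The arithmetic behind this example can be sketched as follows. The 10-item cart and the 150 ms mobile round-trip time are assumptions chosen for illustration, and the latency figure is a lower bound that assumes calls within a batch run in parallel.

```python
def thick_client_calls(cart_size):
    """Client-side composition: three serial batches, two of them per item."""
    fetch_cart_ids = 1
    fetch_options = cart_size  # color/style options for each product
    fetch_details = cart_size  # details and price for each product
    return {
        "calls": fetch_cart_ids + fetch_options + fetch_details,
        "serial_batches": 3,
    }


def composed_calls(cart_size):
    """Backend composition: one client request regardless of cart size."""
    return {"calls": 1, "serial_batches": 1}


def client_latency_ms(serial_batches, mobile_round_trip_ms=150):
    """Lower bound on client-observed latency, one round trip per batch."""
    return serial_batches * mobile_round_trip_ms


thick = thick_client_calls(cart_size=10)
composed = composed_calls(cart_size=10)

print(thick["calls"], client_latency_ms(thick["serial_batches"]))        # 21 450
print(composed["calls"], client_latency_ms(composed["serial_batches"]))  # 1 150
```

With composition, the serial calls still happen, but between services inside our network, where round trips are typically far cheaper than over a mobile connection.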

Data Composition Options

Let’s look at three options for combining all this data together:

- Thick Mobile Client: Data is composed directly in the mobile or web app. Only consider if supporting one client and updates are infrequent, optimizing for simplicity.

- Custom Composition Service(s): Custom logic in backend composition services is written to compose data. Each new client query requires a code change. Consider if supporting only a few clients or all clients only require a standard set of queries which change infrequently.

- Federated GraphQL Gateway(s): Standardizes composition across services with a standard query language. One graph across all services is maintained, exposed either through a centralized backend service or through a per-domain gateway (federation) for isolation and organizational ownership. Consider when supporting a variety of clients or client queries are updated frequently.

Option 1: Thick Client

In this model, business logic in the client integrates directly with domain service APIs. For example, a shopping cart client might integrate with APIs from the Cart, Product Detail, Customer Profile, Payment Option, and Delivery Option services. Each API returns data beyond what the client experience needs, and each serial network call must wait on a mobile network round trip, incurring significant latency. Together, the superfluous data over the wire and the unnecessary round trips degrade the user experience and drain battery.

Option 2: Custom Composition Service(s)

In this model, custom backend services are created to shift the composition logic off the clients and onto the backend. This improves release cadence flexibility, reduces code duplication across web and mobile platforms, and improves the customer experience with fewer network round trips and less data over the wire. Over time, however, organizations may see these custom composition services proliferate across a multitude of bespoke apps and experiences, each requiring engineering effort up front and then ongoing maintenance, operations, and cost. Every time a frontend mobile or web team builds a new feature, they need to work with the relevant backend composition service and add logic for the new data requirements. This slows time-to-market for new features, can increase complexity over time, and may raise organizational questions about who builds and maintains these aggregation services and the custom code that powers each bespoke frontend experience.

Option 3: Federated GraphQL Gateway(s)

Option 3 builds an overarching data graph across services and domains. Clients requiring data from any combination of services can fetch it in a single query, with only the requested data returned and no more. Each domain has its own sub-graph and the servers which host it, but all the data is composed (“stitched”) together into an overarching federated graph, exposed through a common set of GraphQL gateways.
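The idea of composing sub-graphs server-side and returning only the requested fields can be sketched in plain Python. This is not GraphQL and uses no GraphQL library: the resolver functions stand in for domain-owned sub-graphs, and all names and data are hypothetical.

```python
# Hypothetical per-domain resolvers; each stands in for one domain's sub-graph.
def resolve_cart(cart_id):
    return {"id": cart_id, "product_ids": ["p1", "p2"], "updated_at": "2024-01-01"}


def resolve_product(product_id):
    catalog = {
        "p1": {"id": "p1", "name": "Espresso beans", "price": 12.5, "weight_g": 500},
        "p2": {"id": "p2", "name": "Oat milk", "price": 3.0, "weight_g": 1000},
    }
    return catalog[product_id]


def query_cart(cart_id, product_fields):
    """Compose cart and product data server-side, keeping only requested fields."""
    cart = resolve_cart(cart_id)
    products = [resolve_product(pid) for pid in cart["product_ids"]]
    return {
        "cart": {
            "id": cart["id"],
            "products": [
                {field: product[field] for field in product_fields}
                for product in products
            ],
        }
    }


# The client names the fields it needs; weight_g and updated_at never leave the backend.
result = query_cart("c42", product_fields=["name", "price"])
print(result)
```

In a real federated graph the field selection comes from the client's query document and the gateway plans which sub-graphs to call, but the effect for the client is the same: one request, one response, no superfluous fields.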

Combining a federated GraphQL implementation, where each domain owns a sub-graph of the data that is then composed together, with a cell-based architecture, where multiple clusters of our overarching graph are deployed independently, brings a number of benefits. It improves system reliability and removes single points of failure, while decoupling teams' changes and deployments from each other. Each domain controls, operates, and deploys its own portion of the graph, while the overarching graph across domains and services is provided to frontend web and mobile clients.

Benefits:

- Simplifies client queries: Data from multiple services can be fetched in a single request, returning only the required data.

- Improves network efficiency: Minimizes round-trips and reduces payload size.

- Accelerates feature delivery: Frontend engineers can create and iterate on queries without backend changes.

- Decentralization: Domains manage their sub-graphs independently, enabling team autonomy and removing single-points-of-failure.

- Scales effectively: Supports a wide variety of clients with diverse data needs.

Given we will not have many unique clients with a multitude of disparate data querying needs, we could either start with Option 2 or go straight to Option 3 for future extensibility. We will use Option 3, with a common overarching data graph exposed through a set of isolated composition gateways using GraphQL. This will speed up time-to-market for new user-facing features, with mobile engineers able to rapidly create and iterate on new queries across domains and services without backend changes, while also minimizing long-term operational maintenance compared to Option 2. It also keeps network round trips and data payloads to a minimum compared to Option 1.

Real-time Notifications and Data

When drivers are operating in on-demand mode and are offered an on-demand route, they require timely notifications on their mobile devices. Likewise, while drivers are viewing available schedule-ahead routes, the data must be kept continuously updated.

Push Notifications

To send push notifications to drivers' mobile devices, rather than build a custom notification system, we can simply leverage standard mobile push notification functionality through a third-party provider such as AWS Simple Notification Service (SNS), Airship, or Twilio. These services provide straightforward APIs that we will integrate with to send push notifications to drivers. For further reading on mobile push notifications, see Apple Push Notification Service (APNs) for iOS and Notifications for Android; similarly for Web, the W3C Push API using Service Workers.

Real-time Data

There are several technologies available to provide real-time data to clients, from short polling, to long polling, to WebSockets, to Web Push, to WebRTC.

Short Polling

In this model, the client sends a new request to the server at a frequent interval (e.g. 3 seconds) and the server returns immediately with any new data. This minimizes connection time to free up resources, but incurs a lot of traffic volume.
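A minimal sketch of a short-polling client loop follows. The `fetch` callable is a hypothetical stand-in for an HTTP request that returns new data or `None`, and the sleep function is injectable so the example runs instantly.

```python
import time


def short_poll(fetch, interval_seconds, max_polls, sleep=time.sleep):
    """Call fetch() at a fixed interval and collect any non-empty responses."""
    updates = []
    for attempt in range(max_polls):
        data = fetch()  # the server answers immediately, with data or nothing
        if data is not None:
            updates.append(data)
        if attempt < max_polls - 1:
            sleep(interval_seconds)
    return updates


# Hypothetical server responses: only the third poll has new data.
responses = iter([None, None, {"available_routes": 3}, None])
stats = {"requests": 0}


def fake_fetch():
    stats["requests"] += 1
    return next(responses)


updates = short_poll(fake_fetch, interval_seconds=3, max_polls=4, sleep=lambda s: None)
print(stats["requests"], updates)  # 4 [{'available_routes': 3}]
```

Four requests were sent to deliver one update, which is the traffic overhead the paragraph above describes.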

Long Polling

With long polling, the client still continuously sends requests to the backend service at configured intervals, but much less frequently (e.g. 30 seconds). The connection is held open until the backend server responds with a data change or the connection times out and a new connection starts. Compared to short polling, this reduces traffic volume and increases connection times. The increased connection time can be a challenge at scale for IO concurrency models spawning a thread-per-connection, but can work very effectively for asynchronous IO where many connections can be maintained efficiently. Long polling is straightforward to implement and may effectively meet data freshness requirements without the complexity of more advanced technologies we’ll discuss next.
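The server side of long polling can be sketched with asynchronous IO, where a held-open request costs little more than a suspended coroutine. The `RouteFeed` class and route names are hypothetical, and the timeouts are shortened so the example finishes quickly.

```python
import asyncio


class RouteFeed:
    """Hypothetical server-side feed that long-poll handlers wait on."""

    def __init__(self):
        self._changed = asyncio.Event()
        self._routes = []

    def publish(self, routes):
        self._routes = routes
        self._changed.set()  # wake any request currently being held open

    async def long_poll(self, timeout_seconds):
        """Hold the request open until data changes or the timeout elapses."""
        try:
            await asyncio.wait_for(self._changed.wait(), timeout=timeout_seconds)
        except asyncio.TimeoutError:
            return None  # no change: the client reconnects and waits again
        self._changed.clear()
        return self._routes


async def demo():
    feed = RouteFeed()
    # Nothing is published, so the first request times out.
    first = await feed.long_poll(timeout_seconds=0.05)
    # Publish shortly after the second request starts waiting.
    asyncio.get_running_loop().call_later(0.01, feed.publish, ["route-7"])
    second = await feed.long_poll(timeout_seconds=1.0)
    return first, second


result = asyncio.run(demo())
print(result)  # (None, ['route-7'])
```

The second request returns as soon as the data is published rather than at the end of its timeout, which is what lets long polling deliver fresh data with far fewer requests than short polling.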

WebSockets

A real-time bidirectional communication technology such as WebSockets or WebRTC (typically for audio/video) lets either the client or the server initiate updates. WebSockets provide a persistent, bidirectional communication channel between the client and server, enabling immediate data transmission as events occur. A Pub/Sub system maintains a mapping of clients to the data they are subscribed to; whenever that data is updated, the fresh data is pushed to all subscribers. This is particularly beneficial for applications requiring high-frequency updates (e.g. <1 second), such as real-time messaging (e.g. Slack) and collaboration tools (e.g. Google Docs). WebSockets bring additional complexity though, as the backend service becomes stateful, maintaining connections with clients, and therefore requires new consideration in how it is deployed and scaled.
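The subscriber mapping at the heart of a Pub/Sub system can be sketched in a few lines. In this illustration the `send` callbacks stand in for writes to open socket connections; the class, topic names, and driver IDs are hypothetical.

```python
from collections import defaultdict


class PubSub:
    """In-memory mapping of topics to the clients subscribed to them."""

    def __init__(self):
        self._subscribers = defaultdict(dict)  # topic -> {client_id: send callback}

    def subscribe(self, topic, client_id, send):
        self._subscribers[topic][client_id] = send

    def unsubscribe(self, topic, client_id):
        self._subscribers[topic].pop(client_id, None)

    def publish(self, topic, message):
        """Push the message to every subscriber of the topic; return the count."""
        subscribers = list(self._subscribers.get(topic, {}).values())
        for send in subscribers:
            send(message)
        return len(subscribers)


bus = PubSub()
inbox = {"driver-1": [], "driver-2": []}

# Each callback here just appends to a list instead of writing to a socket.
bus.subscribe("region:seattle", "driver-1", inbox["driver-1"].append)
bus.subscribe("region:austin", "driver-2", inbox["driver-2"].append)

delivered = bus.publish("region:seattle", {"route": "r-9"})
print(delivered, inbox)
# 1 {'driver-1': [{'route': 'r-9'}], 'driver-2': []}
```

Because this mapping lives in the memory of whichever server holds the connections, the service is stateful, which is the deployment and scaling concern noted above.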

GraphQL Subscriptions

GraphQL Subscriptions combine a GraphQL data graph with WebSockets and a Pub/Sub system to facilitate real-time updates. Clients write a query for the data they require, and whenever any data within that query changes, the updates are pushed to them.

Our Approach

With deferred resolution, we have designed this system to slow down the market, so schedule-ahead route data will be stable for the length of the configured deferred resolution window (e.g. thirty seconds). Thirty seconds is a long time in the world of web requests, so a real-time bidirectional communication technology such as WebSockets with a Pub/Sub system would be overkill at first. Instead, while a driver views available routes, the mobile app will simply long poll the backend service at the configured interval, whether that is thirty seconds, five minutes, or an hour.

In the future, as our platform evolves, we may require more sophisticated real-time bidirectional data between frontend clients and our backend. At that time, we will leverage WebSockets on top of a Pub/Sub service like Redis, exposed through GraphQL Subscriptions, to allow individual drivers to subscribe to a region, route, or other data and receive real-time updates as that data changes. Until then, we will simply leverage long polling for our near-real-time data needs.

Conclusion

In this post, we covered the backend infrastructure and outlined the service architecture. Next, in Parts 3-8, we will delve into the design of each of our domains, subsystems, and services, beginning with the Vendor and 3rd Party Checkout.